Interests

- Human Augmentation

- Mixed Reality • Virtual Reality

- User Interface • Interaction Design

- Bionics • Robotics

- Cognitive Science • Neuroscience

Skills

- C • C# • JavaScript • Python

- HTML • CSS • TeX • Git

- Unity • Arduino

- Processing • Jupyter

- Technical Drawing • Mechanical Machining

- SolidWorks • Pro/ENGINEER • AutoCAD

- Pencil & Marker Sketch • Gouache Painting • Prototyping

- Rhinoceros • Grasshopper • KeyShot • V-Ray • 3ds Max

- Photoshop • Illustrator • Premiere • Sketch • Axure

Education

Keio University

- Human-Computer Interaction • Media Design

- Thesis: Visual Expansion by Animal-Inspired Visuomotor Modification

- Supervisor: Prof. Kouta Minamizawa; Co-supervisor: Prof. Kai Kunze

- English Courses, GPA: 3.84 / 4.33

Imperial College London, Royal College of Art, Pratt Institute

Tokyo, Japan → London, UK → Brooklyn, US

Beihang University

- Industrial Design • Mechanical Engineering

- Outstanding Graduate, GPA: 3.6 / 4.0

Research Experience

University of Auckland

Auckland, New Zealand

- Director: Prof. Mark Billinghurst

- Developed mobile applications for remote collaboration and remote access.

- Designed prototypes and advised on the commercialization of XR technology.

Keio University

Tokyo, Japan

- Director: Prof. Kouta Minamizawa

- Conducted research on haptic sensation, virtual reality, human enhancement, telexistence, and related fields.

- Developed experiments, prototypes, and applications for research projects.

Work Experience

China Mobile, Shanghai Research

Beijing, China

- Researched solutions for the transportation industry, including the Internet of Vehicles, autonomous driving, and vehicle-to-everything (V2X) communication.

- Managed the product line and took part in business analysis, design refinement, factory manufacturing, and software development.

- Designed and developed new patented on-board electronics products for both business and consumer markets.

Lenovo Research

Beijing, China

- Conducted user research on various consumer electronics, including laptops, tablets, and phones, at the User Research Center.

- Developed preliminary designs for next-generation smart devices.

Publications

- Lichao Shen, MHD Yamen Saraiji, Roshan Lalintha Peiris, Kai Kunze, and Kouta Minamizawa. 2020. Visuo-motor Influence on Attached Robotic Neck. In ACM Symposium on Spatial User Interaction 2020 (SUI ’20). (Pending)

- Lichao Shen, MHD Yamen Saraiji, Kai Kunze, and Kouta Minamizawa. 2018. Unconstrained Neck: Omnidirectional Observation from an Extra Robotic Neck. In Proceedings of the 9th Augmented Human International Conference (AH ’18). ACM, New York, NY, USA, Article 38, 2 pages. DOI: https://doi.org/10.1145/3174910.3174955

- MHD Yamen Saraiji, Roshan Lalintha Peiris, Lichao Shen, Kouta Minamizawa, and Susumu Tachi. 2018. Ambient: Facial Thermal Feedback in Remotely Operated Applications. In Extended Abstracts of the 2018 CHI Conference on Human Factors in Computing Systems (CHI EA ’18). ACM, New York, NY, USA, Paper D321, 4 pages. DOI: https://doi.org/10.1145/3170427.3186483

Awards


Best Demo Award (AH ’18)

9th Augmented Human International Conference (AH 2018). Feb 2018. Seoul, Korea.

Design Excellent Prize

Gifts of Fuyang Design Competition. Jan 2013. Zhejiang, China.

Academic Performance Scholarship (three consecutive years)

Beihang University. Dec 2013, 2012, 2011. Beijing, China.

Exhibition: Auto China 2014

13th Beijing International Automobile Exhibition. Apr 2014. Beijing, China.

Exhibition: “City, Museum, Tokyo” Design Exhibition

Dec 2013. Tokyo, Japan.

Patent: A System of Vehicle to Everything

Aug 2020. Beijing, China. (Pending)

Finalist

Red Dot Design Award. Dec 2013. Tokyo, Japan.

Research Works

Limitless Oculus

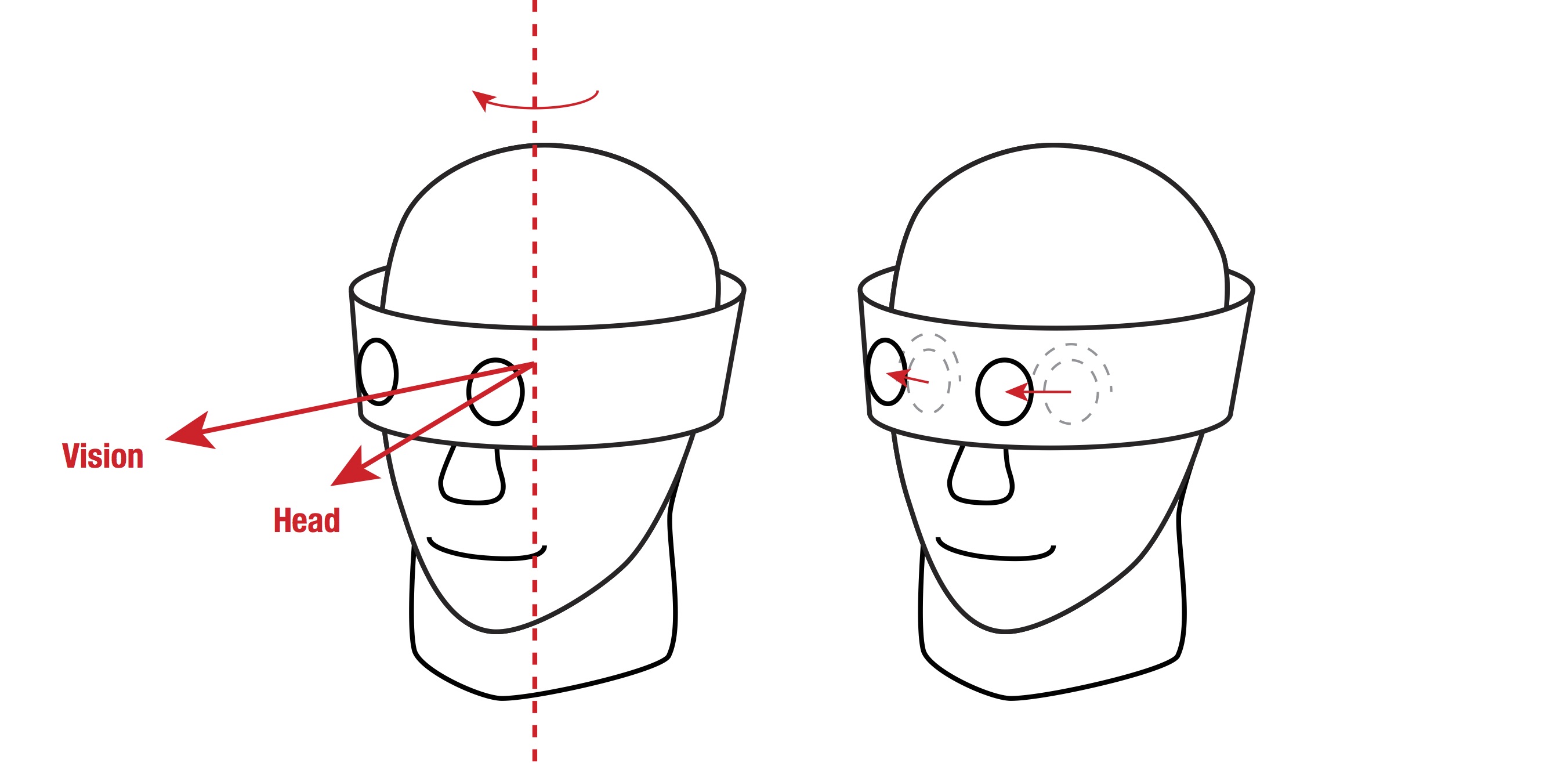

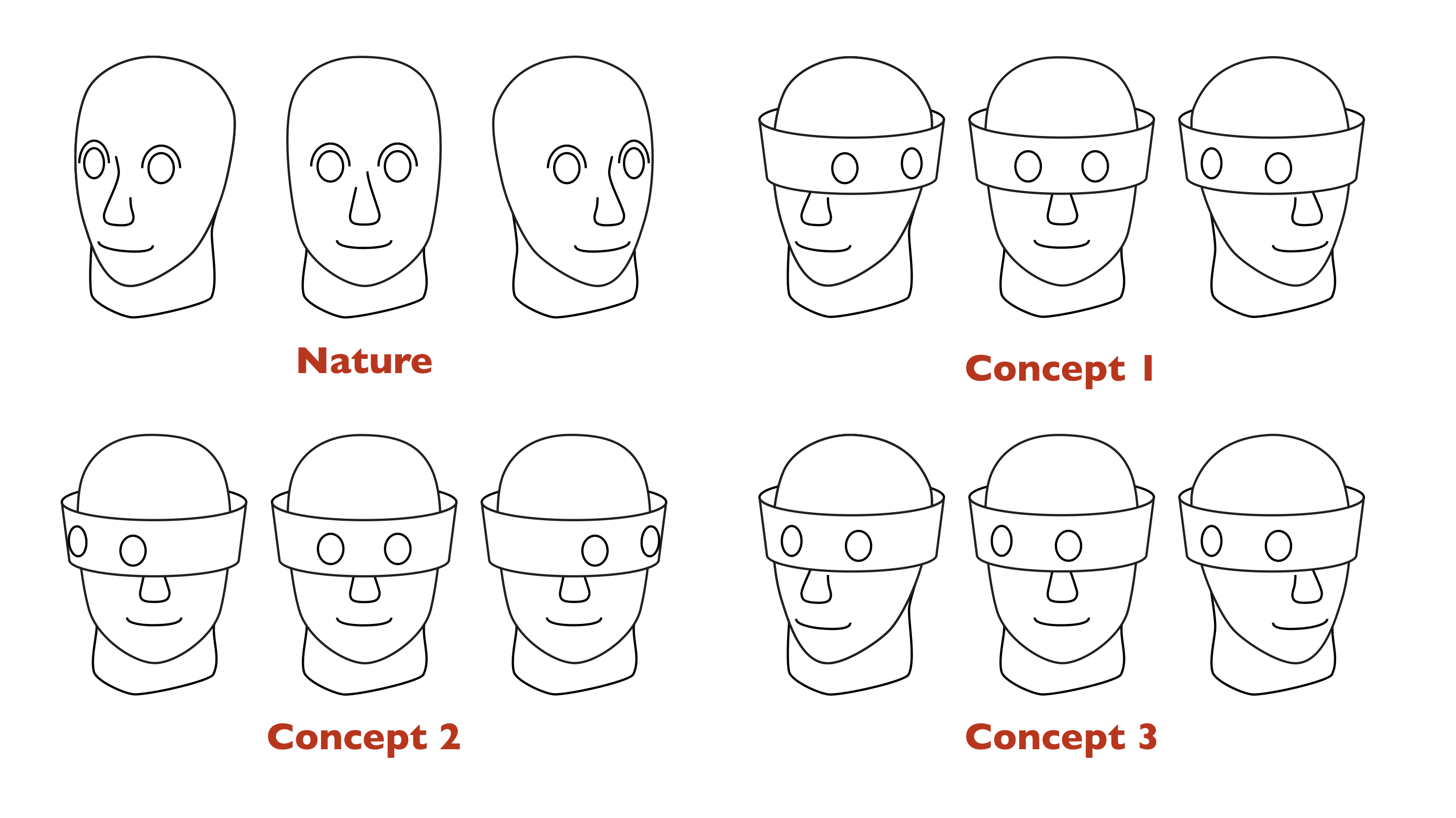

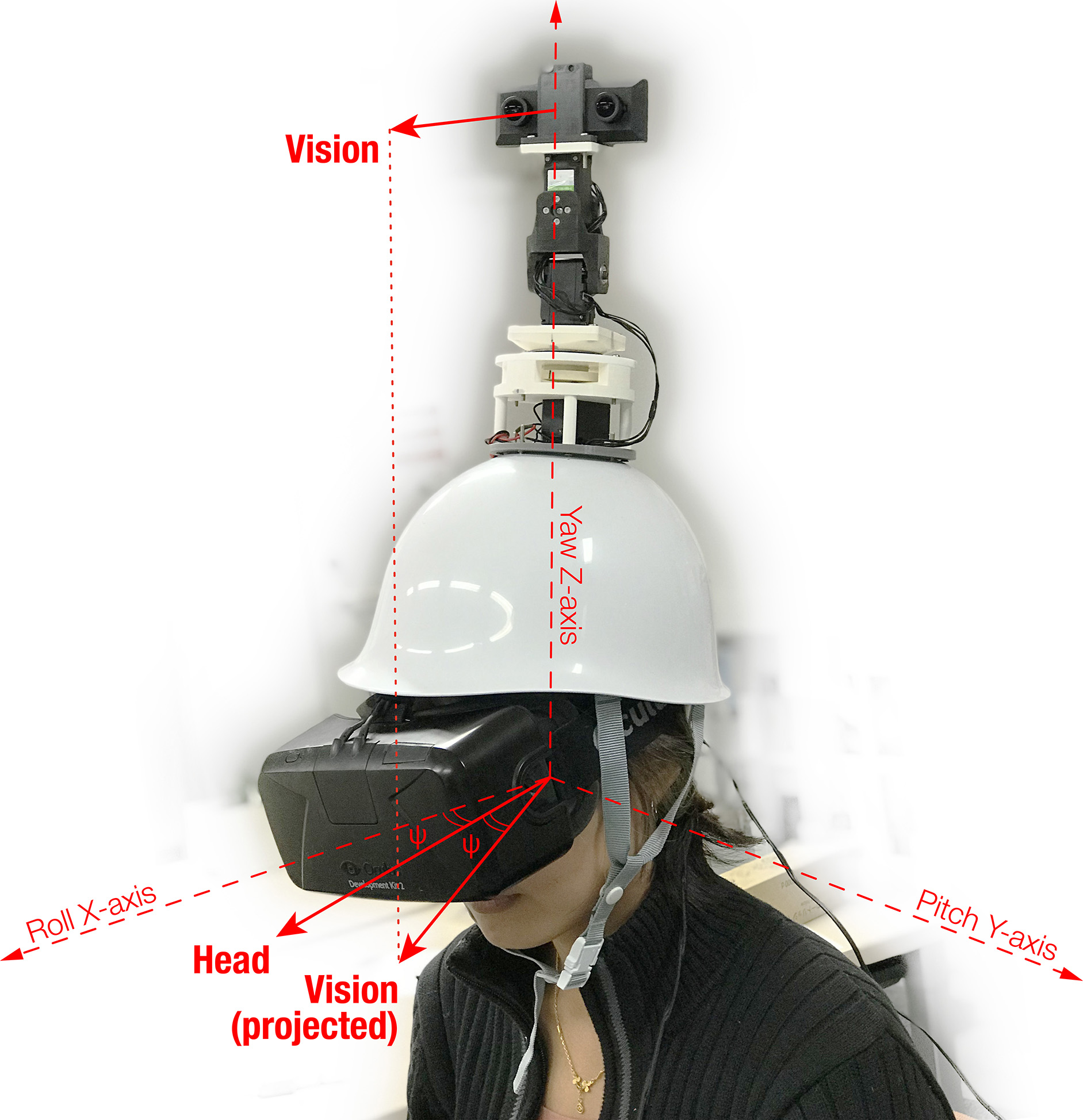

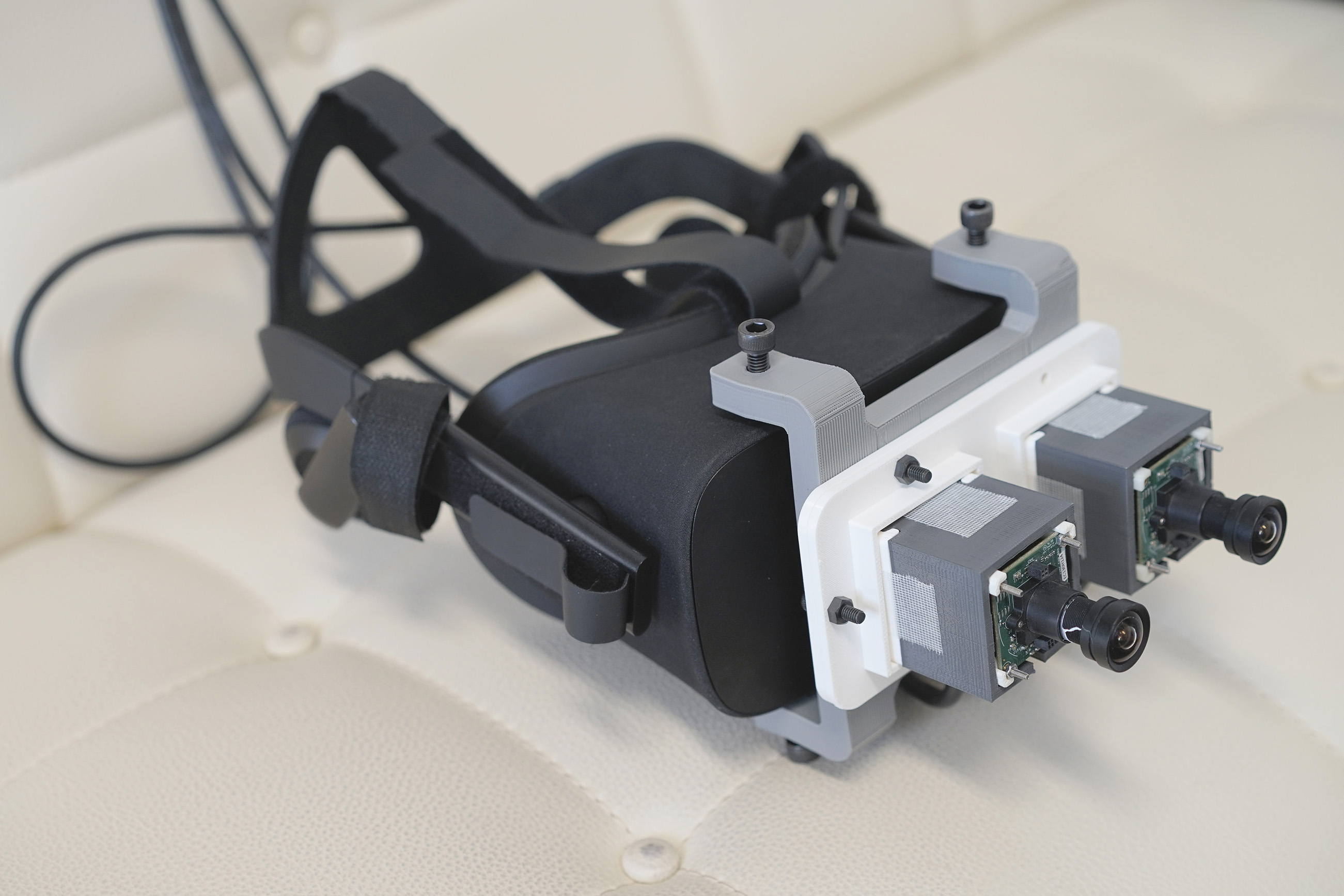

Humans are confined by their limited senses. Human vision is relatively narrow and weak compared with the superior visual abilities of many animals. Through my investigation, I found that the human musculoskeletal system, especially the neck, limits the spatial range of vision. To help people overcome part of this limitation, I proposed an animal-inspired concept called Limitless Oculus, which substitutes or modifies the natural human visuomotor coordination with an artificial mechanism. This mechanism, which orients vision, was designed to be programmable and customizable, with the aim of making the spatial orientation of vision more flexible and thereby achieving visual expansion and substitution. The concept also supports many more applications through different modifications and mechanisms. Because the method has physiological and cognitive effects, it can serve not only as a tool but also as a way to change the human mind. I presented two prototypes and then conducted experiments to evaluate their performance and demonstrate the feasibility of the concept. The results indicated that the spatial range of vision was expanded, and that scanning and response speed could also be augmented within a certain range.

Supervisor: Prof. Kouta Minamizawa; Co-supervisor: Prof. Kai Kunze; Co-reviewer: Ishido Nanako

Unconstrained Neck

Because of the neck's narrow range of motion and the eyes' small visual field, human vision is limited in spatial range. We address this limitation by proposing a programmable neck that extends beyond the natural range-of-motion limits. Unconstrained Neck, a head-mounted robotic neck, is a substitute neck system that provides a wider range of motion, enabling users to overcome the physical constraints of their own neck. With this robotic neck, users can also adjust the visual/motor gain, which lets them control the range and speed of their effective neck motion or visual motion.
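The visual/motor gain idea can be illustrated with a minimal sketch. It assumes a simple linear mapping in yaw only: the robotic neck's yaw is the user's head yaw multiplied by a gain, clamped to a mechanical limit. The function names, the 180° limit, and the linearity are hypothetical and do not describe the actual Unconstrained Neck controller.

```python
def robot_neck_yaw(head_yaw_deg: float, gain: float, limit_deg: float = 180.0) -> float:
    """Map the user's head yaw to a robotic-neck yaw command.

    Hypothetical sketch: assumes a linear visual/motor gain and an
    assumed mechanical limit of +/- limit_deg for the robotic neck.
    """
    target = gain * head_yaw_deg
    # Clamp to the (assumed) mechanical range of the robotic neck.
    return max(-limit_deg, min(limit_deg, target))


def robot_neck_speed(head_speed_dps: float, gain: float) -> float:
    """Under a linear mapping, angular speed scales by the same gain
    (ignoring the clamp, which caps position near the limit)."""
    return gain * head_speed_dps


if __name__ == "__main__":
    print(robot_neck_yaw(60.0, 2.0))    # a 60° head turn becomes a 120° view rotation
    print(robot_neck_yaw(-120.0, 2.0))  # clamped at the assumed -180° limit
    print(robot_neck_speed(30.0, 1.5))
```

With a gain above 1, a small head turn covers a wider field and visual motion speeds up; a gain below 1 trades range for finer, slower control.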

Ambient

Facial thermoreception plays an important role in perceiving both the surrounding ambient temperature and direct sensations of temperature and touch, enhancing our subjective sense of presence. This sensory modality has not been well explored in telepresence and telexistence. We present Ambient, an enhanced remote-presence experience that combines a full-face thermal feedback system with the first-person view of telexistence systems. We give an overview of the design and implementation of Ambient, along with scenarios envisioned for social and intimate applications.

Eye-in-Hand

Under natural conditions, both human eyes receive almost the same visual stimulus, but having each eye register different content could create a new visual experience. We present a wearable device that provides two separate visual captures, one at the position of each hand, so that the user's arms control the orientation of each view. The system blends the two image sources while avoiding binocular rivalry, enabling the user to observe two different views simultaneously. It makes visual orientation more flexible and drastically alters visuomotor coordination. We then examine users' adaptability to the split vision and the resulting sickness.

Bug View

Previous telepresence and telexistence research has focused on humanoid robots and on duplicating human sensation. In contrast, Bug View focuses on mapping human bodily consciousness onto a non-human subject with a completely different body schema, in this case a spider robot. It aims to provide an immersive experience of "being a spider" along with smooth operation of the robot. The human-robot mapping consists of sensory mapping and kinematic mapping. We propose several feasible methods and build prototypes. We then plan to conduct experiments to find the approach that provides the highest degree of presence. Finally, we discuss potential applications of this concept.

Design Works

Live!VR

Live!VR is a Tokyo-based start-up project in the Japanese anime industry. It is designed for broadcasting and live-streaming new forms of anime content using real-time mixed reality. Its key figure is the Virtual YouTuber (VTuber, usually a virtual anime character), and its commercial content covers derivative products, fashion, tourism, advertising, promotion, and more. The main features implemented include motion control (via Kinect), emotion control, lip-voice synchronization, real-scene overlay, and multiplayer.

- Participated in commercial planning, user research, and product design.

- As the technology partner, wrote the program in Unity and C# and set up the devices and animation.

Re-design Projects

Alipay - City Service

Revit

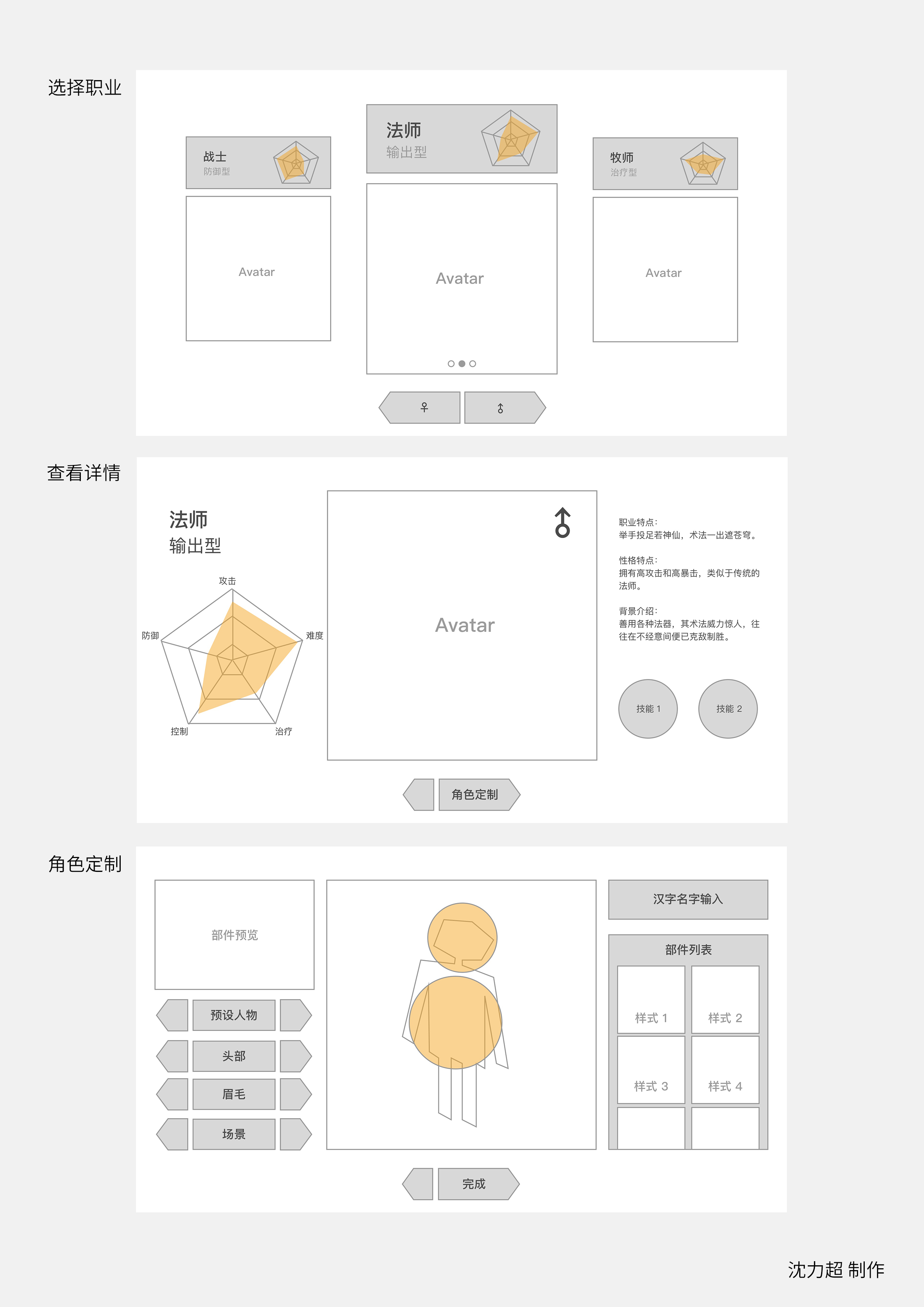

Game - Create A Role

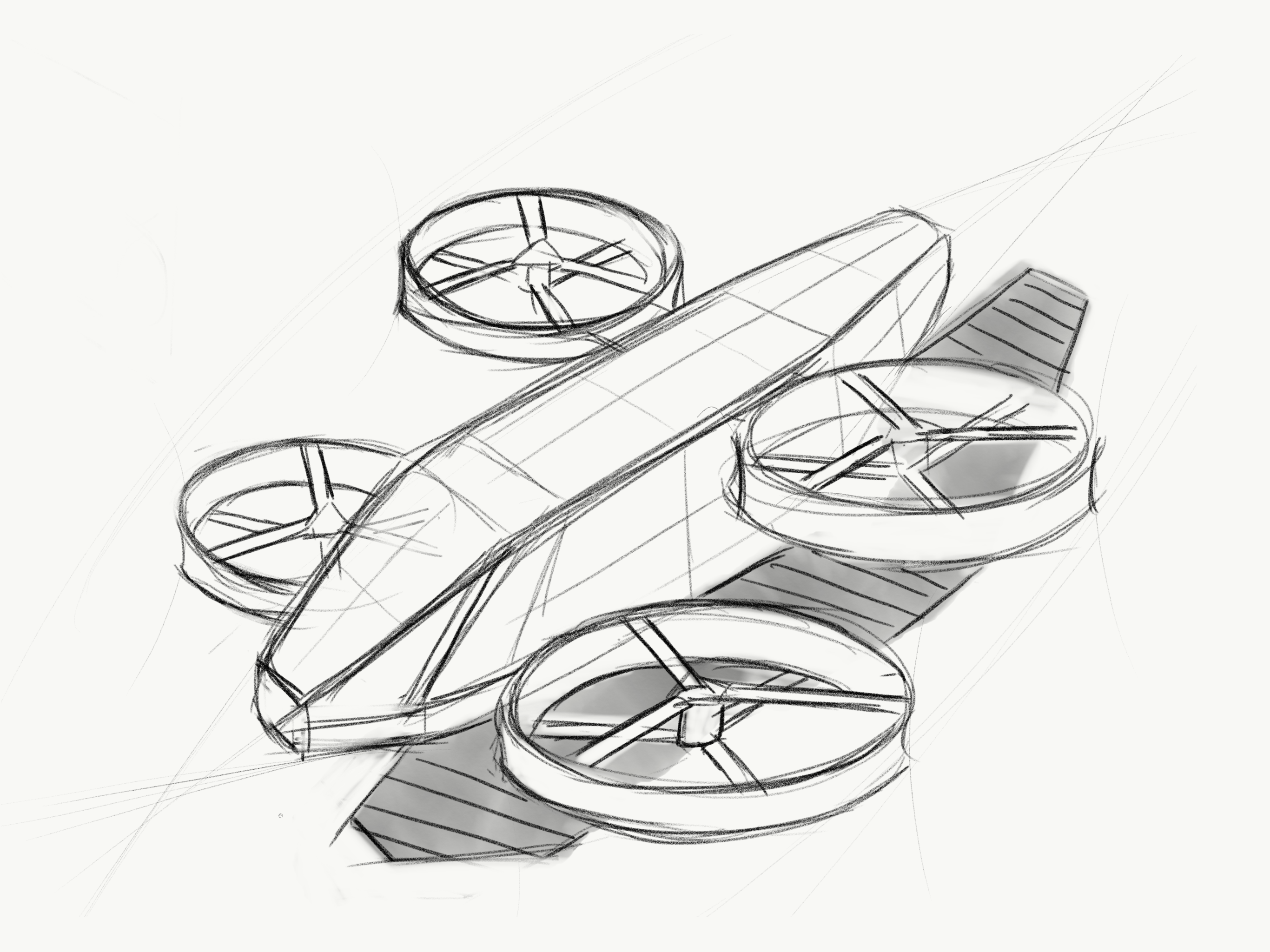

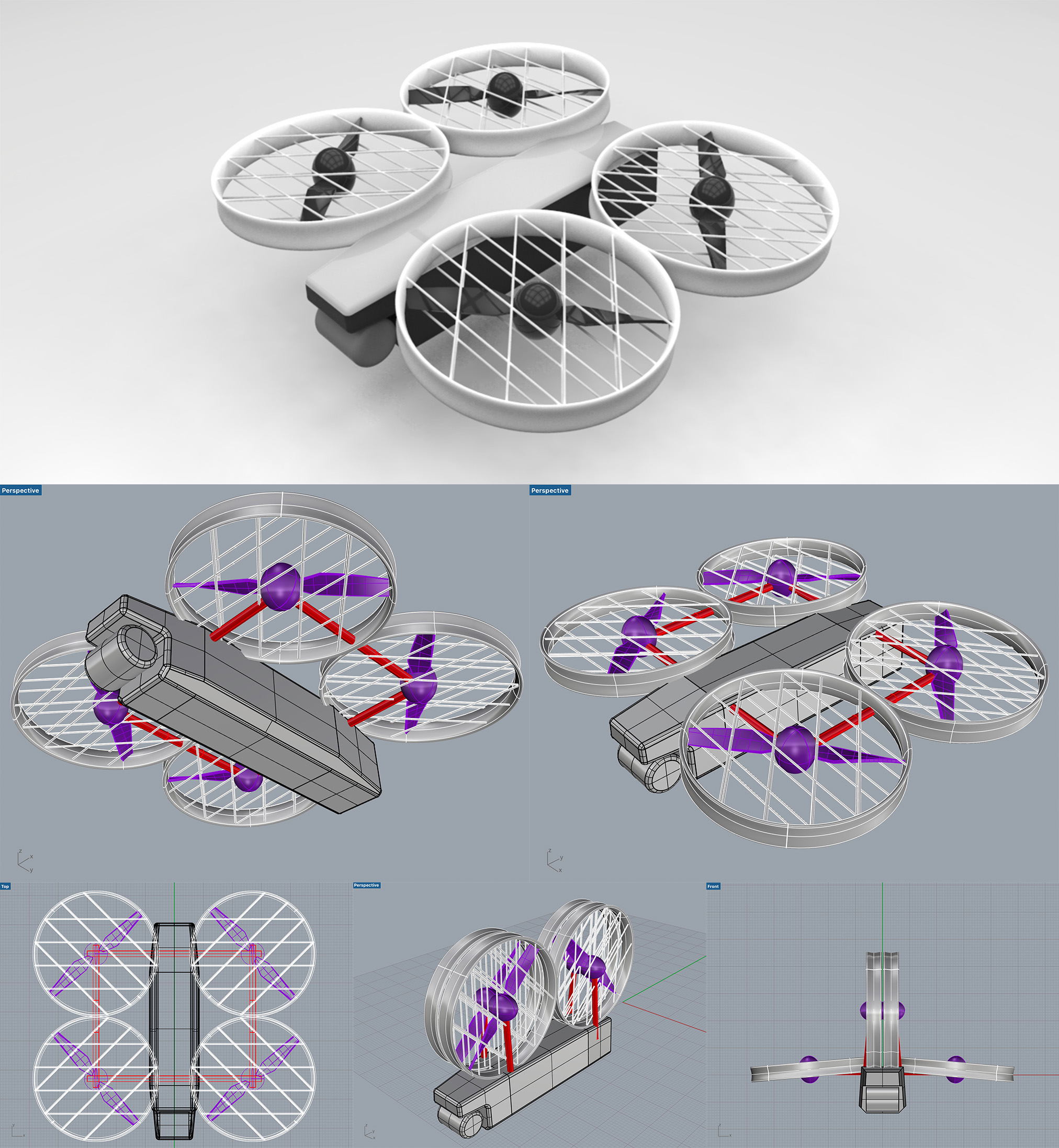

DJI Drone

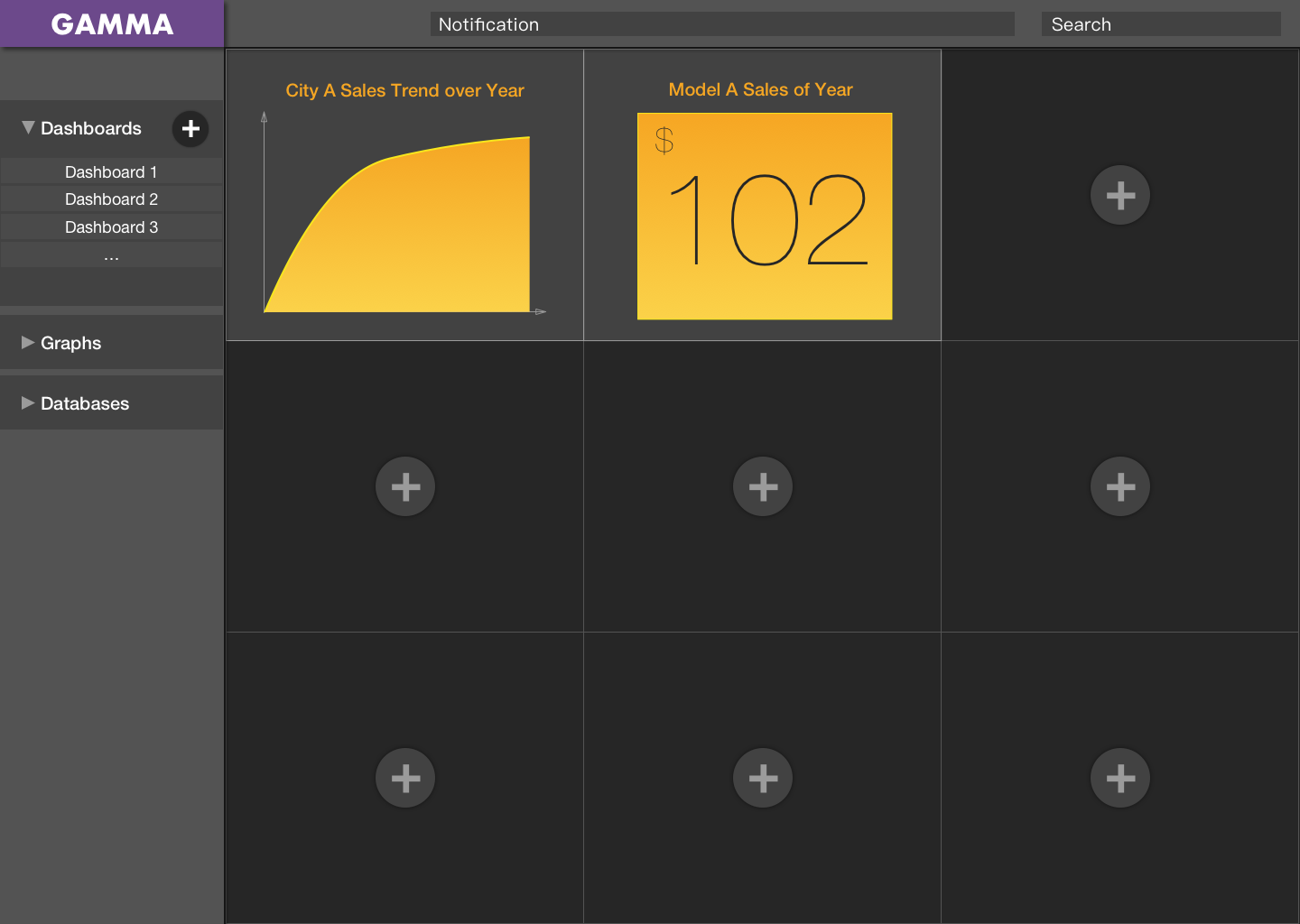

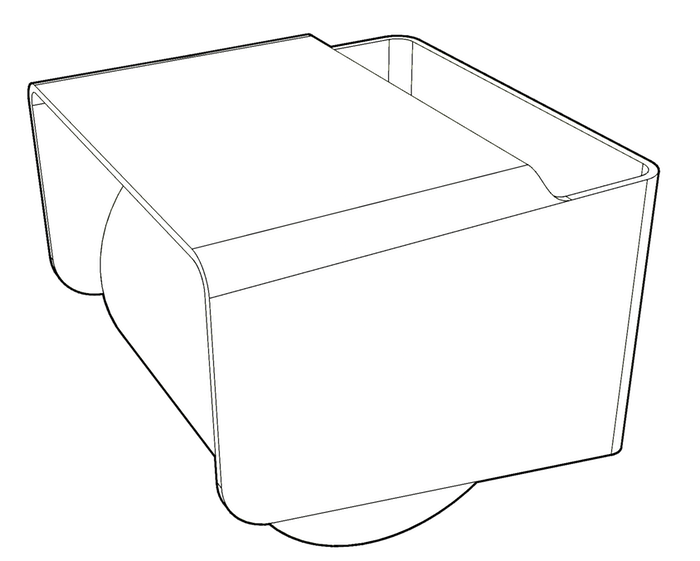

Retail Dashboard

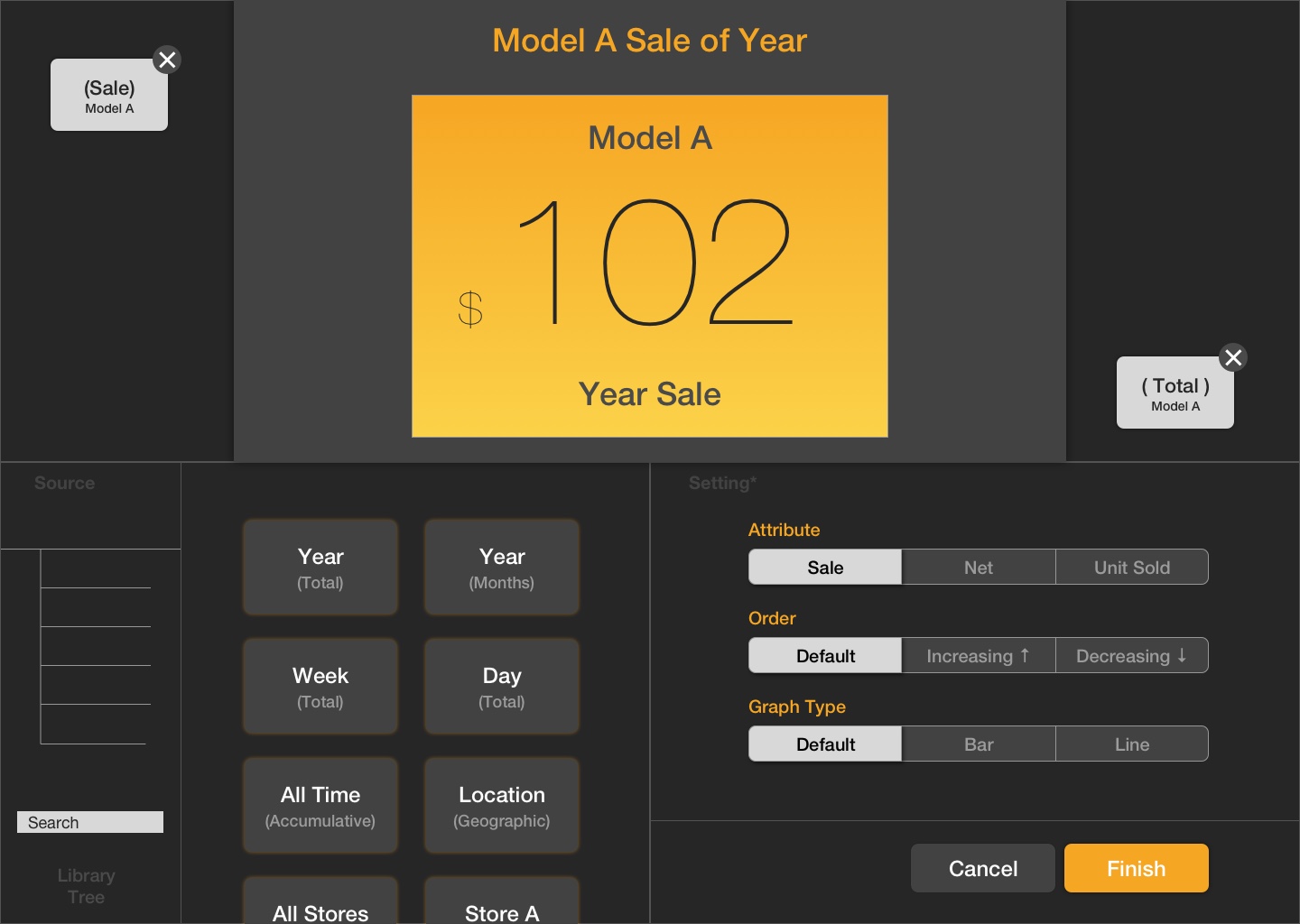

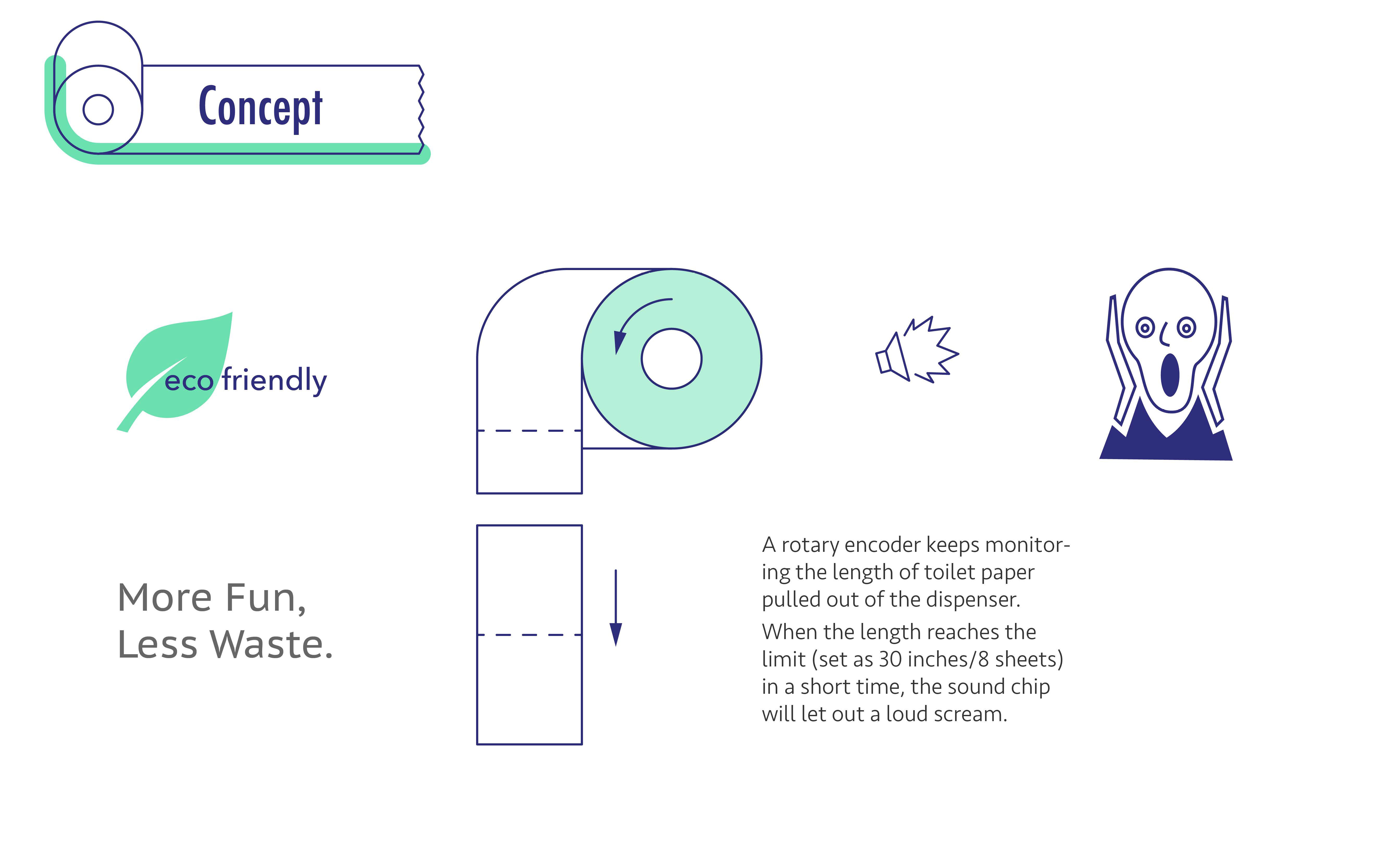

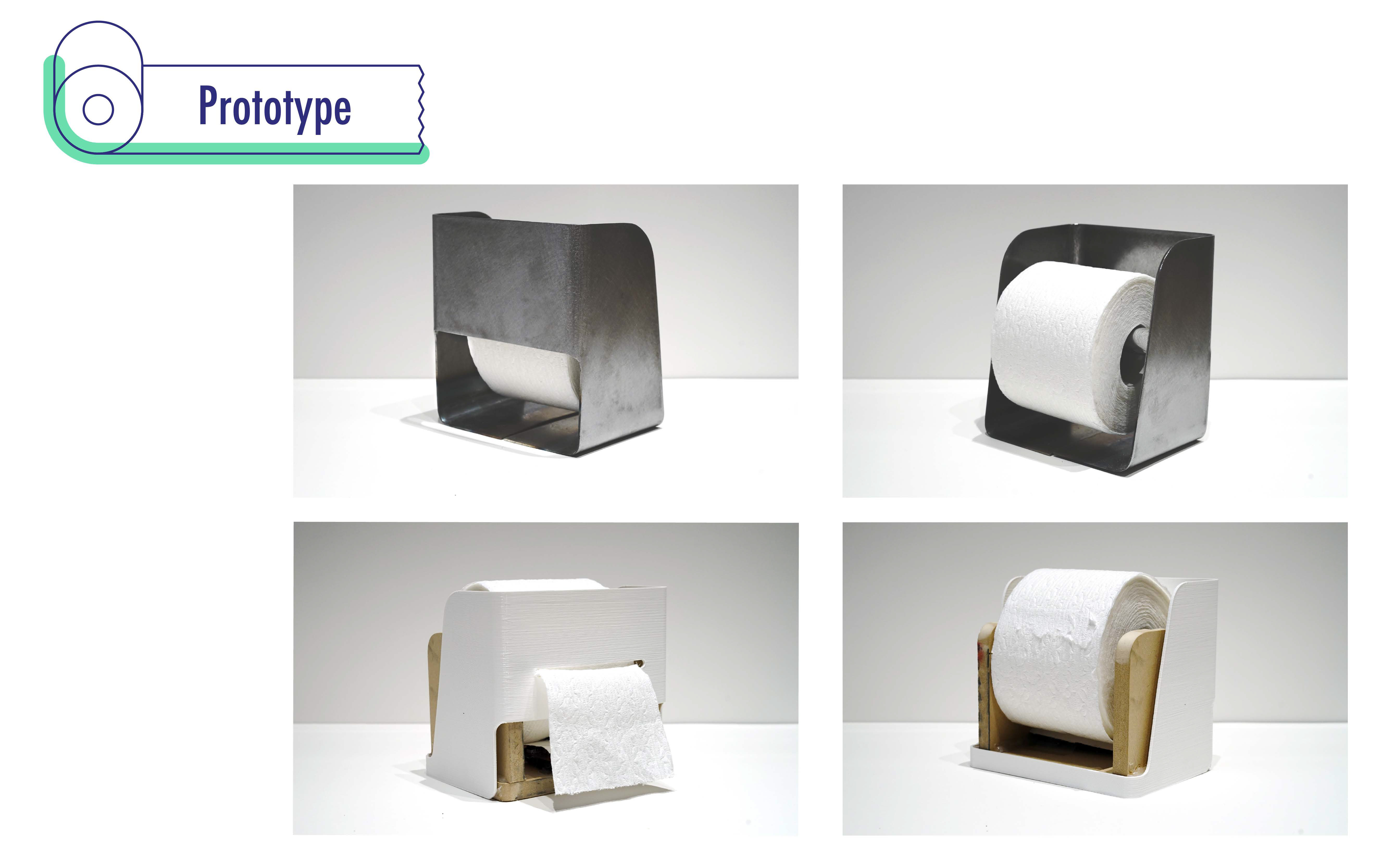

TP/Screamer

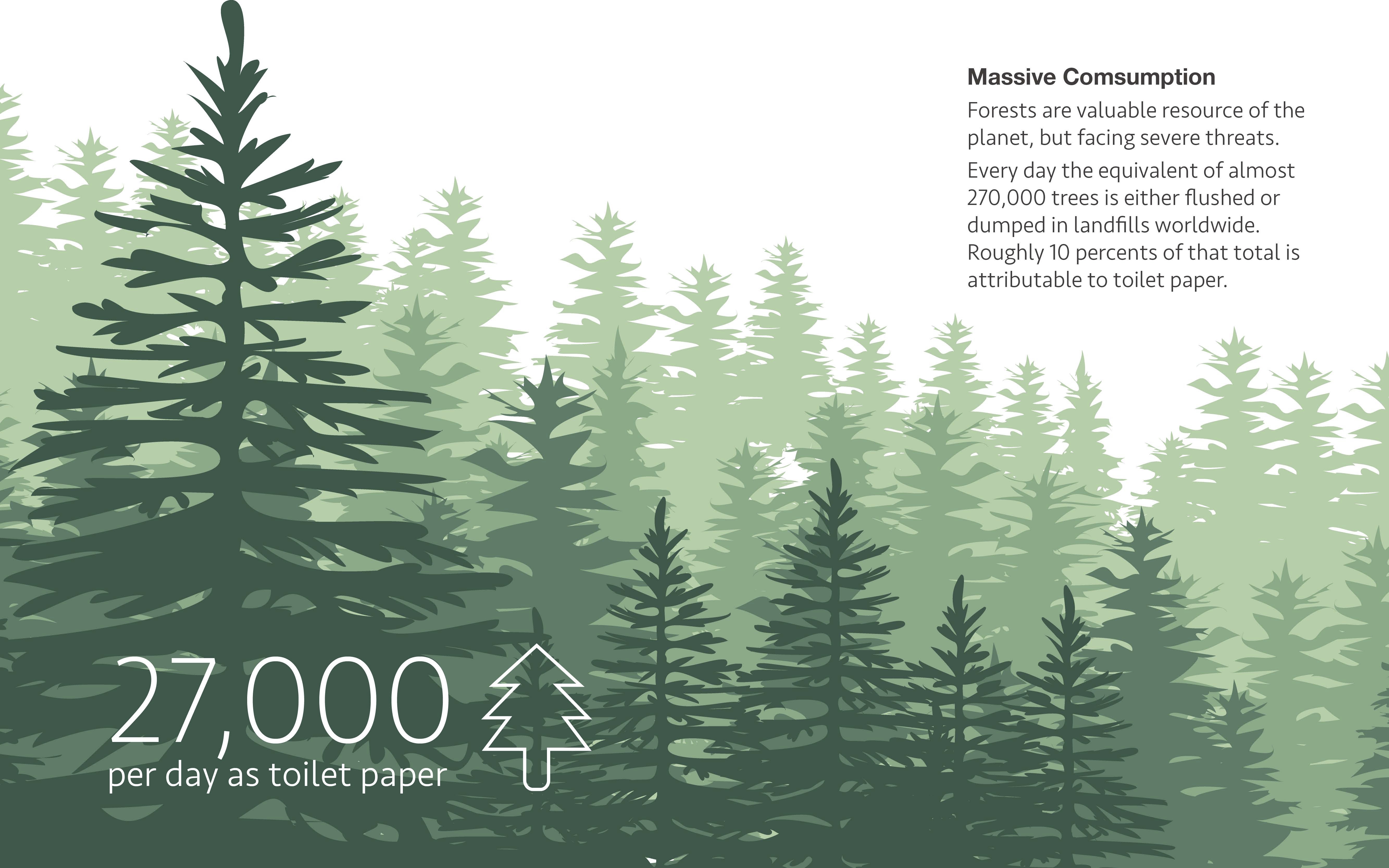

TP/Screamer (Toilet Paper Screamer) is a new eco-friendly toilet paper dispenser designed to reduce toilet paper waste. Whenever the paper pulled out exceeds a set limit (for instance, 30 inches), it plays a voice warning that prompts users not to use more than necessary. It is both educational and fun, and suits both public and home settings. The product is being prepared for online crowdfunding.

- Designed the product independently, and fabricated an appearance prototype and a functional prototype.

- Produced branding materials, including an illustrated story and a promotional video.
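The warning behavior described for TP/Screamer amounts to a threshold check on the length pulled during one use. The sketch below is hypothetical: it assumes a sensor that reports inches pulled per reading, treats a pause longer than 5 seconds as the end of one use, and warns once per use. The class name, pause length, and one-warning rule are assumptions, not the product's firmware; only the 30-inch example limit comes from the description.

```python
from dataclasses import dataclass


@dataclass
class PullMonitor:
    """Hypothetical controller logic for a TP/Screamer-style dispenser."""
    limit_in: float = 30.0      # example threshold from the description
    idle_reset_s: float = 5.0   # assumed pause that ends one use
    pulled_in: float = 0.0      # length pulled in the current use
    last_pull_t: float = 0.0    # time of the previous reading (seconds)
    warned: bool = False        # whether this use has already triggered a warning

    def on_pull(self, t: float, inches: float) -> bool:
        """Record a pull at time t; return True if the voice warning should play now."""
        if t - self.last_pull_t > self.idle_reset_s:
            # A long pause means a new use: start counting from zero.
            self.pulled_in = 0.0
            self.warned = False
        self.last_pull_t = t
        self.pulled_in += inches
        if self.pulled_in > self.limit_in and not self.warned:
            self.warned = True  # warn only once per use
            return True
        return False


if __name__ == "__main__":
    m = PullMonitor()
    for t in (0.0, 1.0, 2.0, 3.0, 4.0):
        if m.on_pull(t, 8.0):
            print(f"warning at t={t}s after {m.pulled_in} inches")
```

Warning once per use keeps the dispenser from repeating itself while someone finishes pulling; a real device might instead repeat the warning at fixed intervals.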

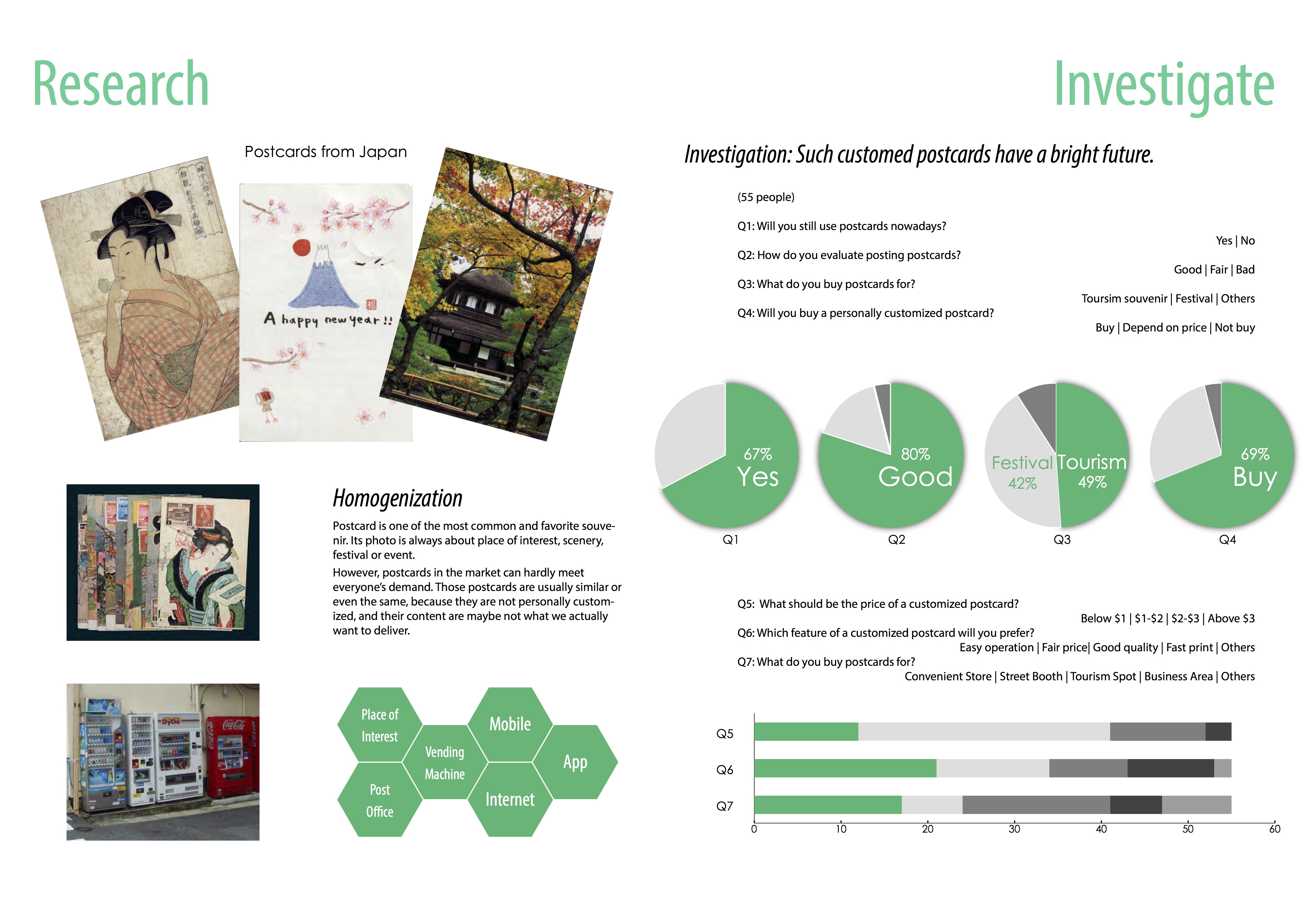

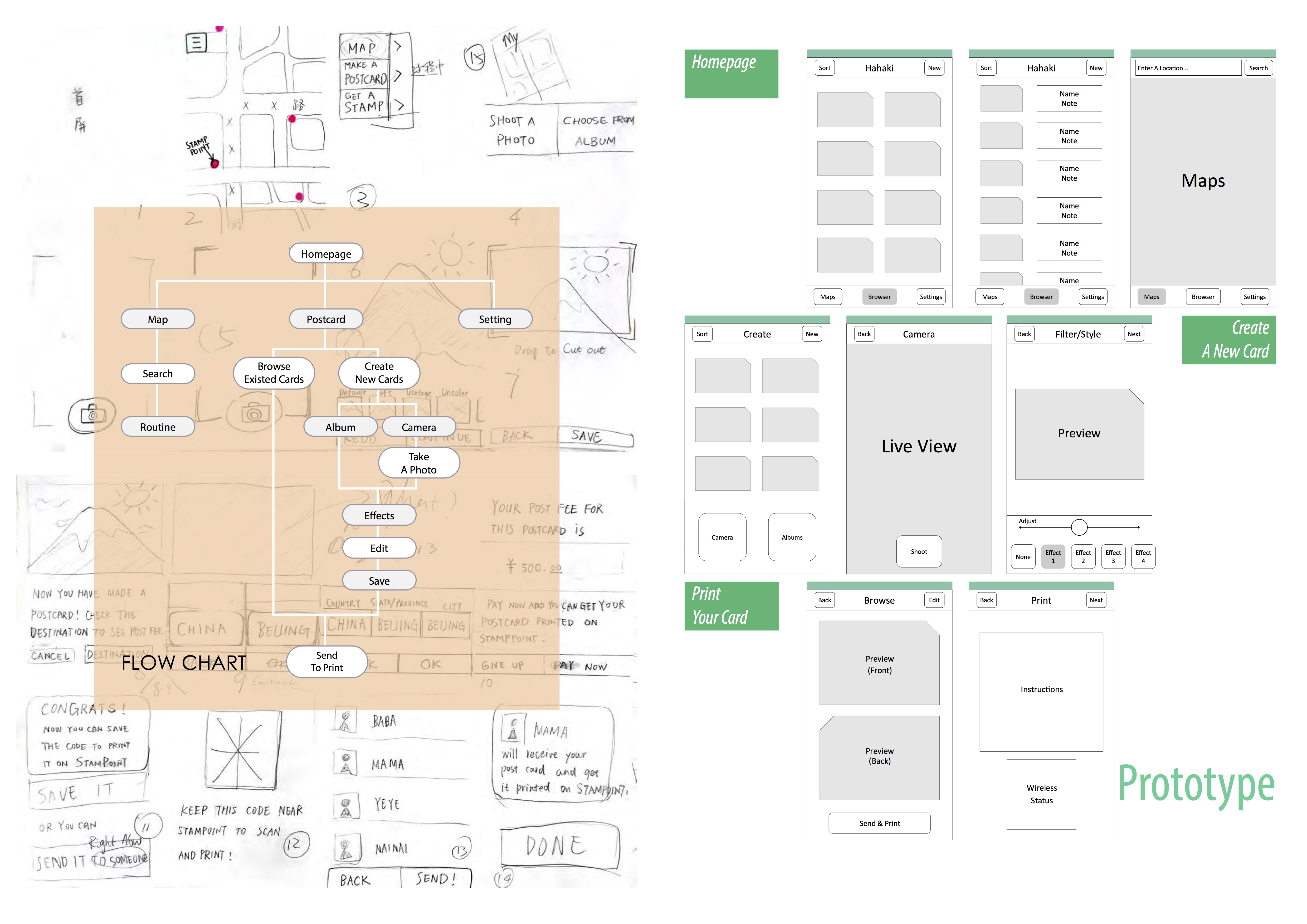

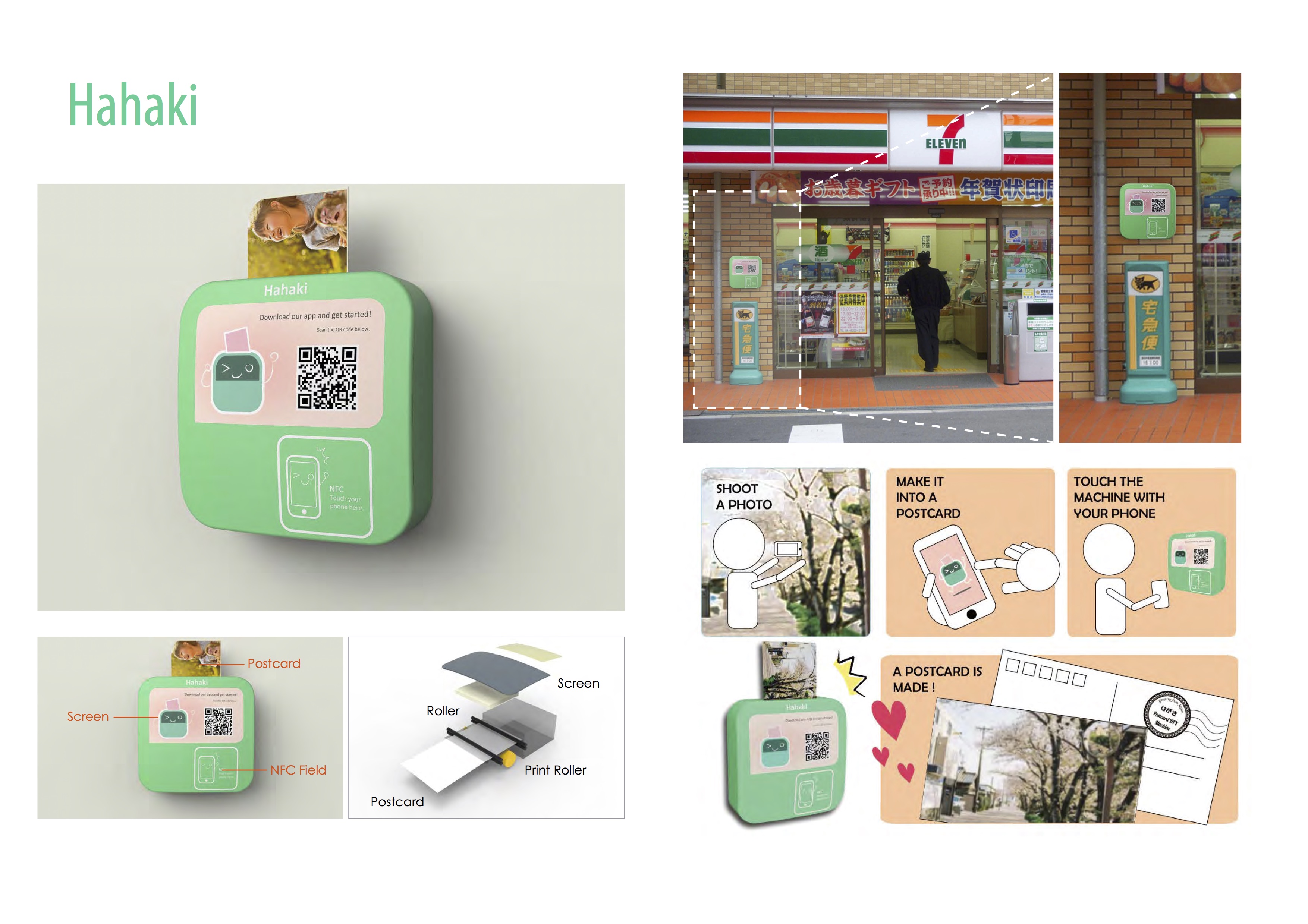

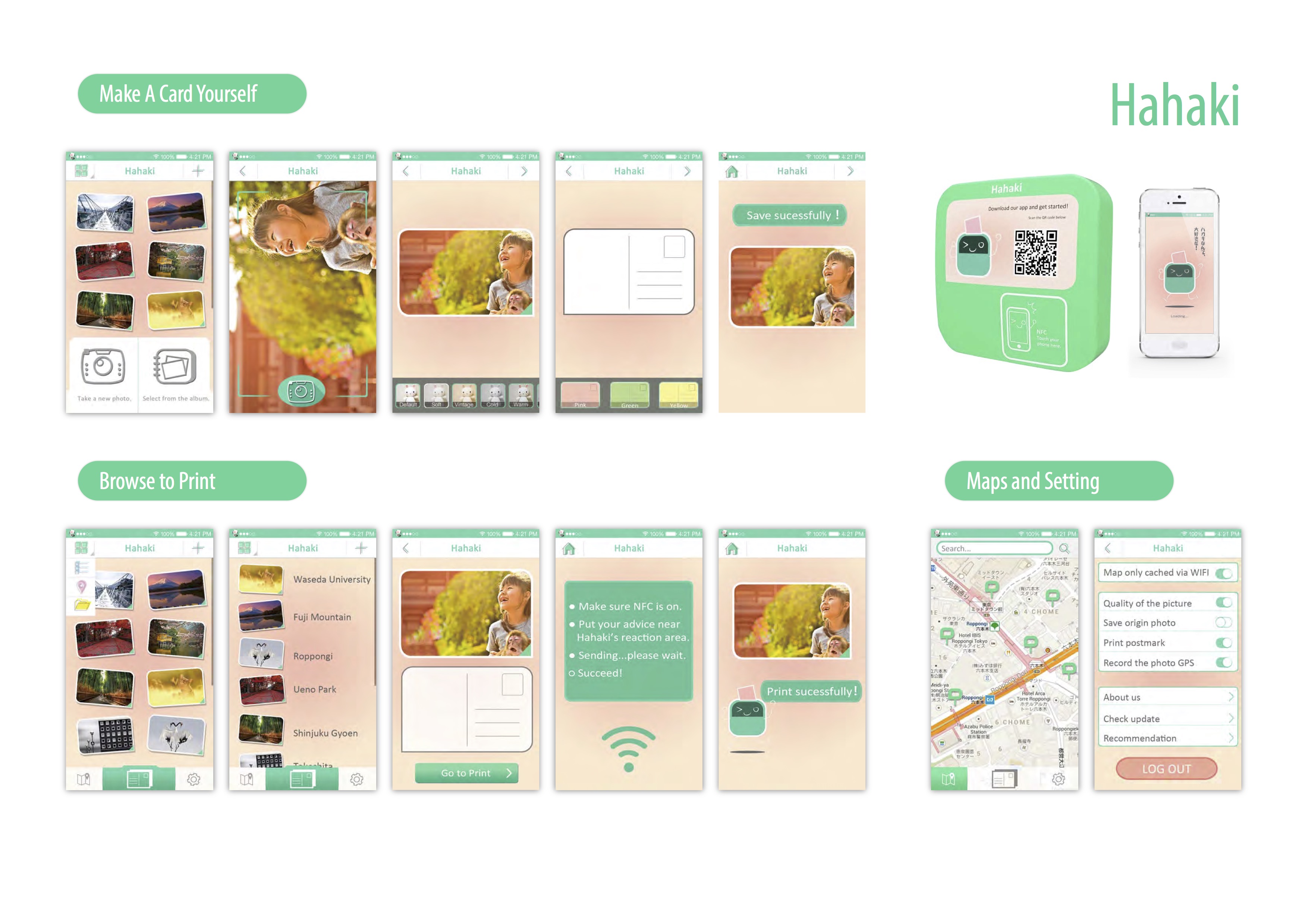

Hahaki

Hahaki is a customizable postcard system for modern cities. It consists of a smartphone app and a printing machine. In the app, users can edit the content and pin interesting information to their postcards. After customizing them, users can print the postcards at machines placed near scenic areas and then mail them. It was selected for the “City, Museum, Tokyo” Design Exhibition in Tokyo, Japan, in December 2013.

- Led a group to finish the project.

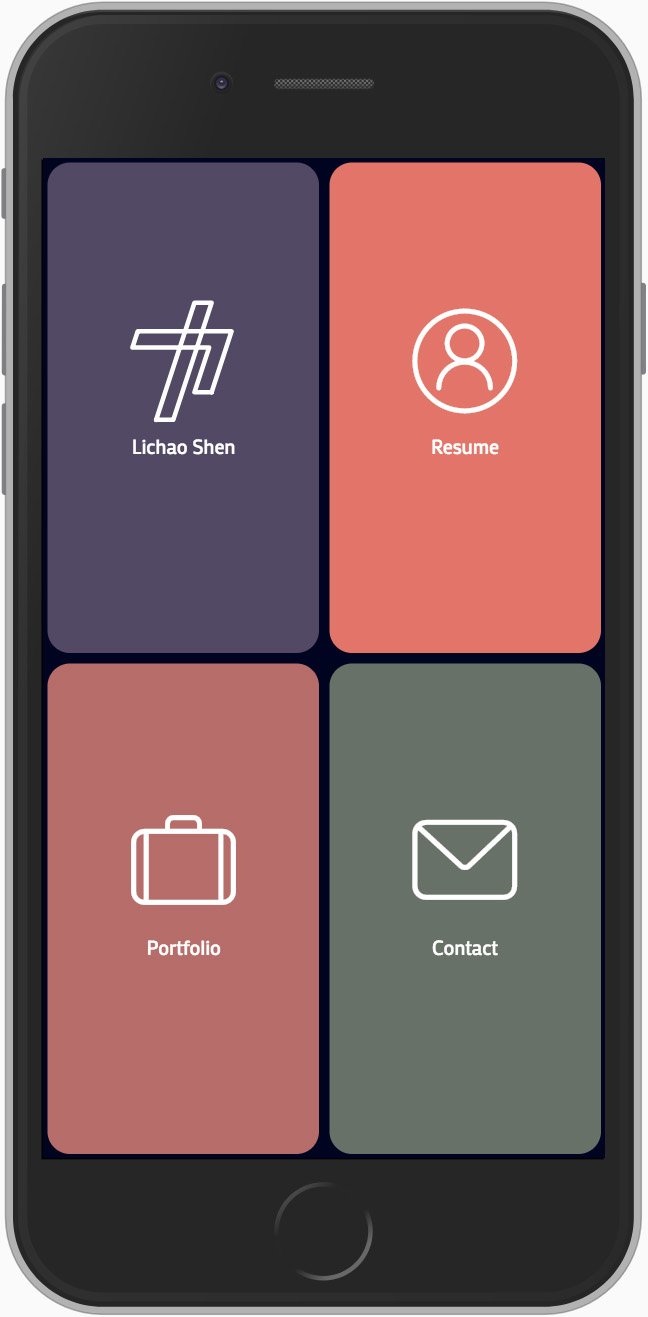

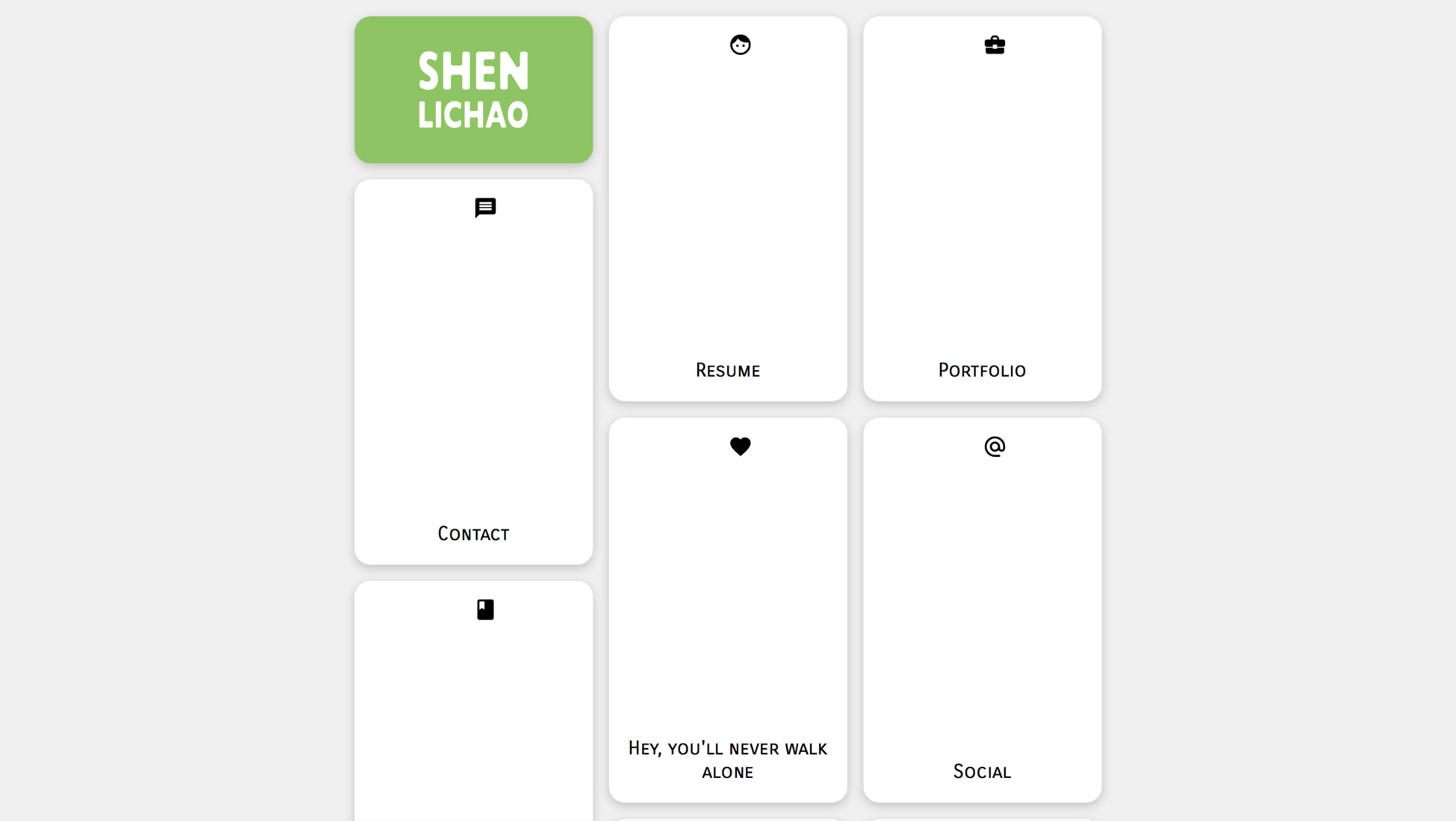

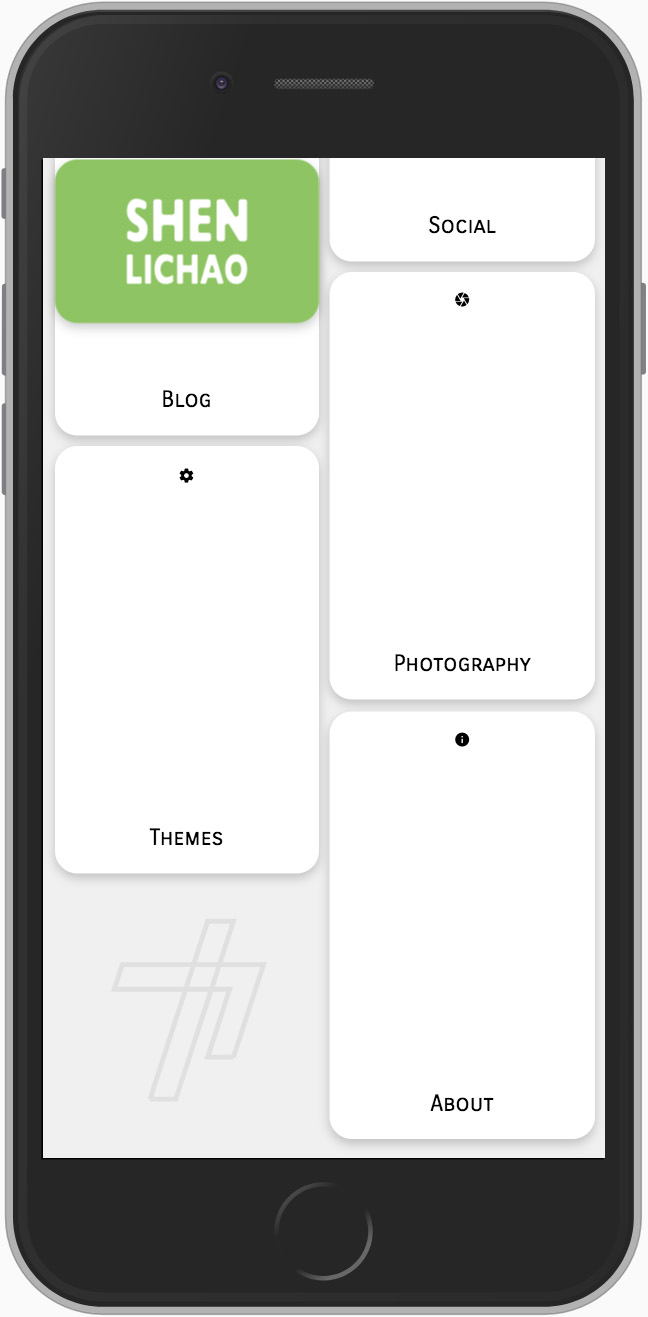

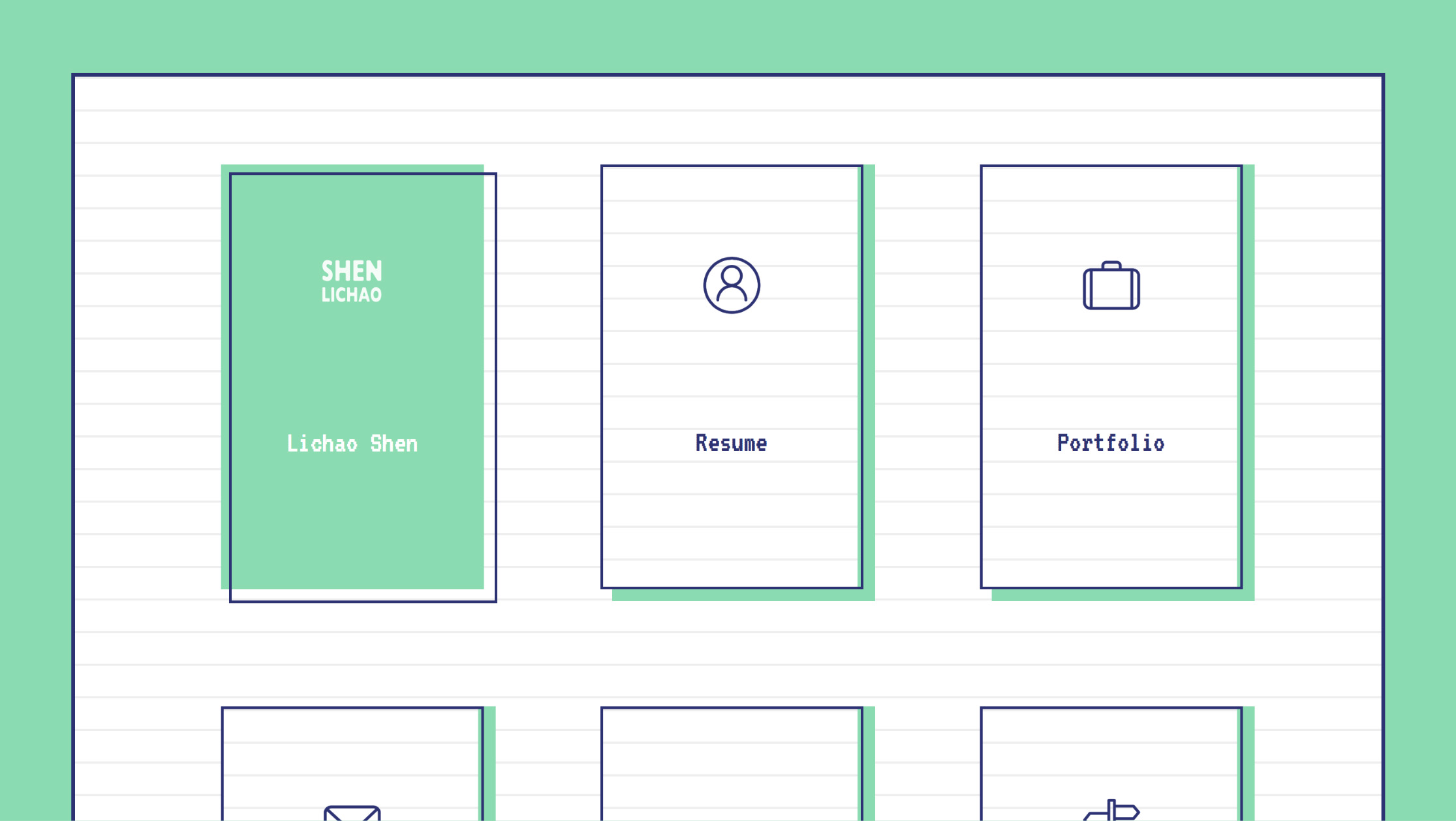

- Proposed the concept and designed the demo, UX, and UI of the mobile app and the printer.

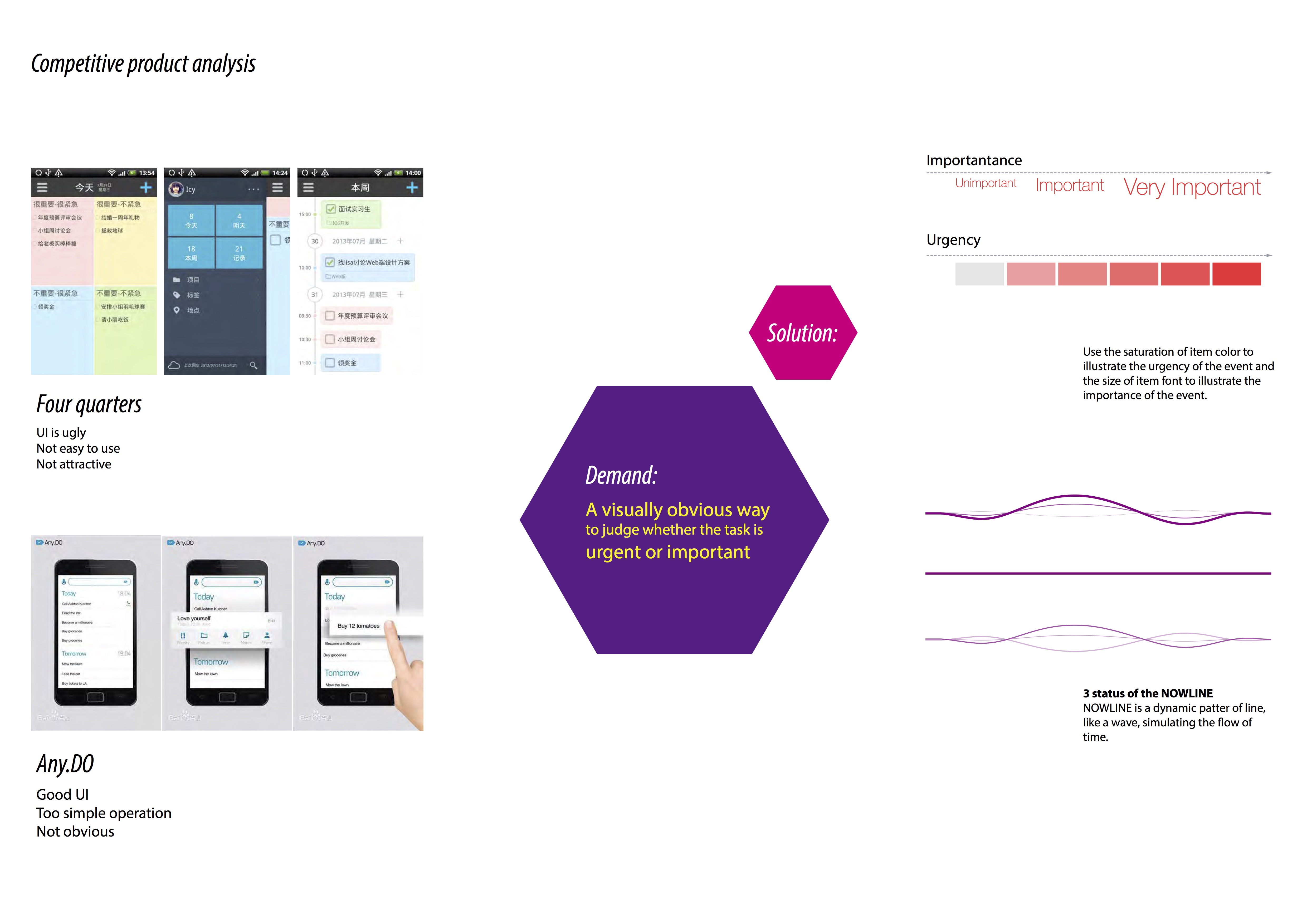

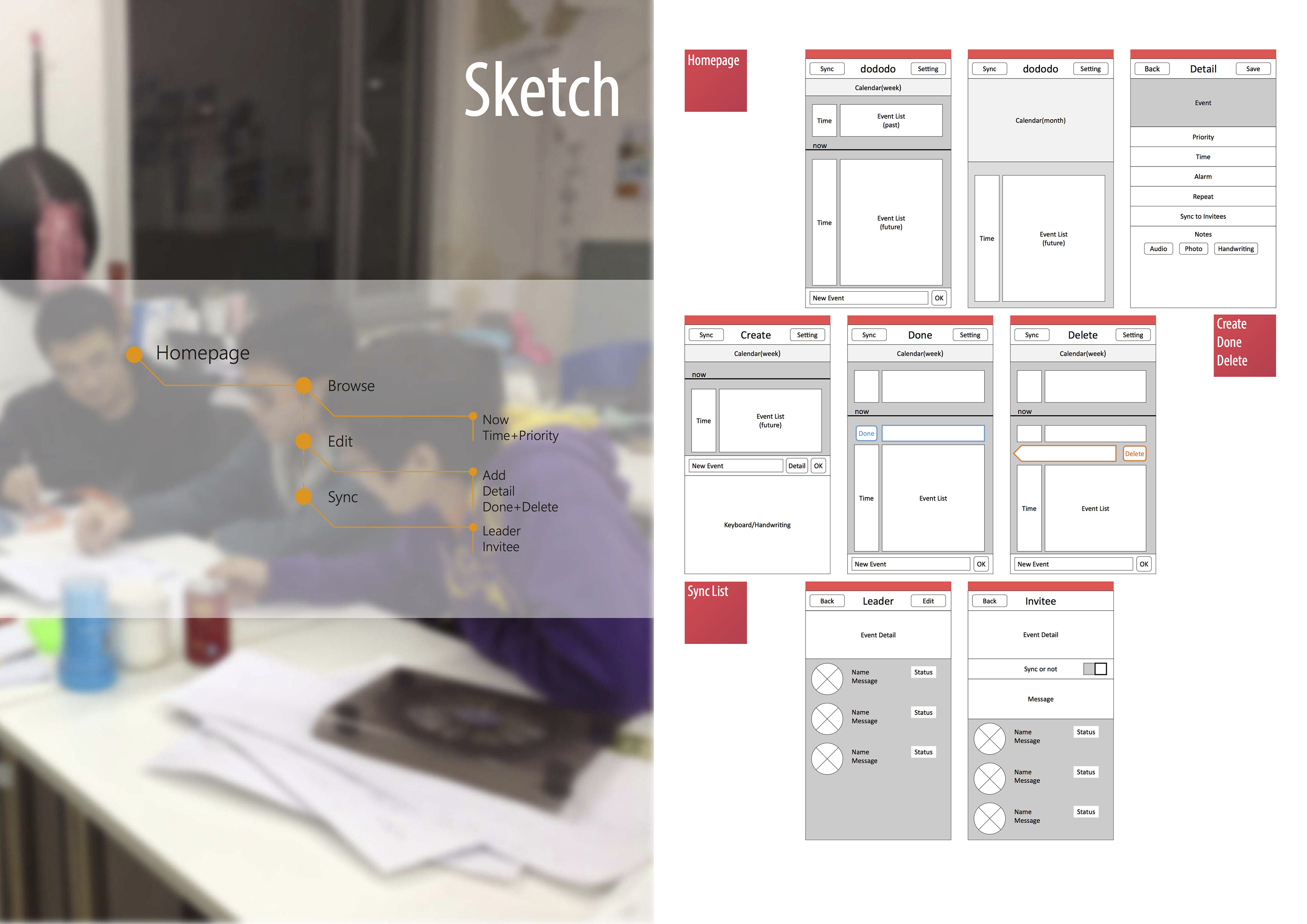

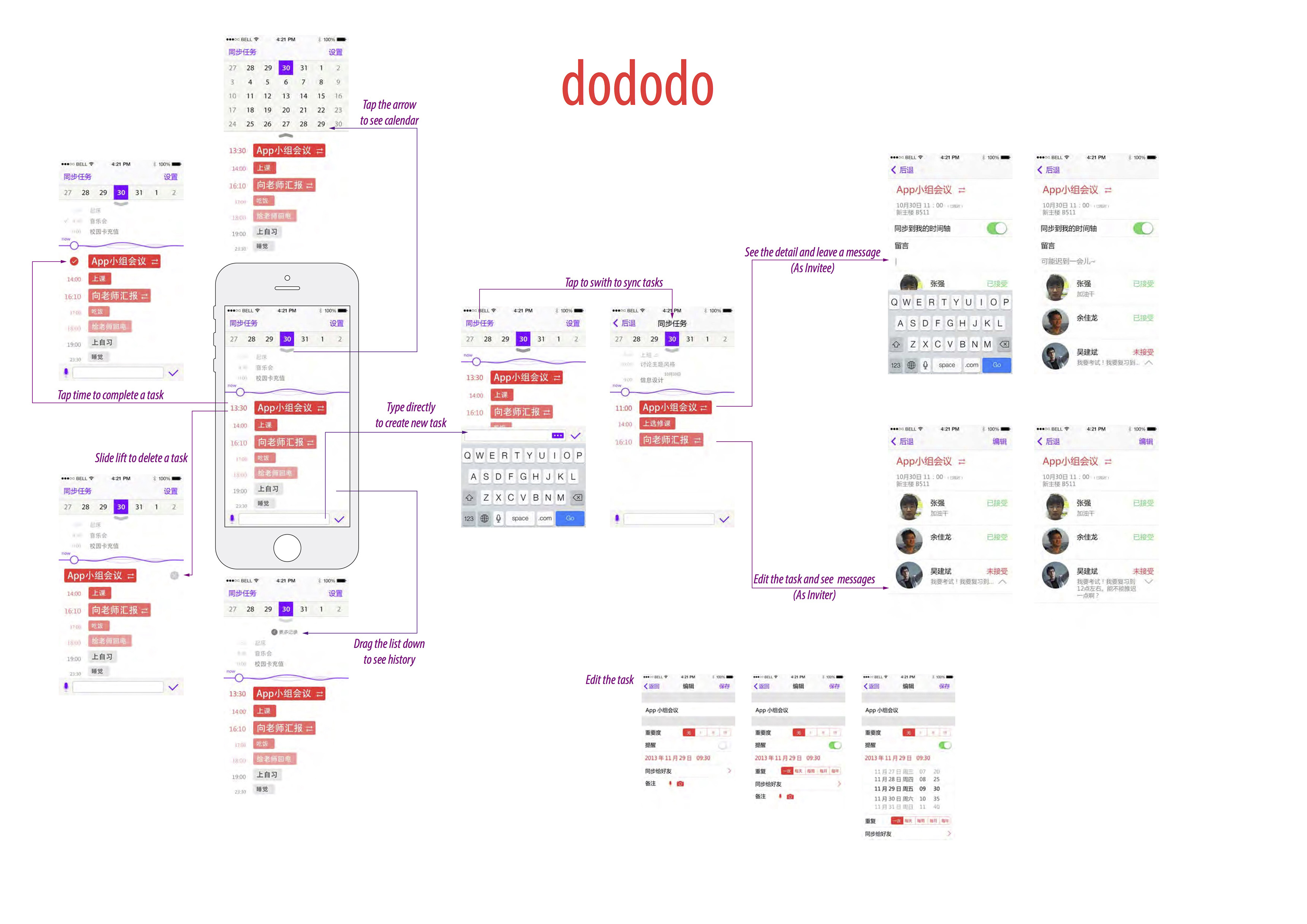

Dododo

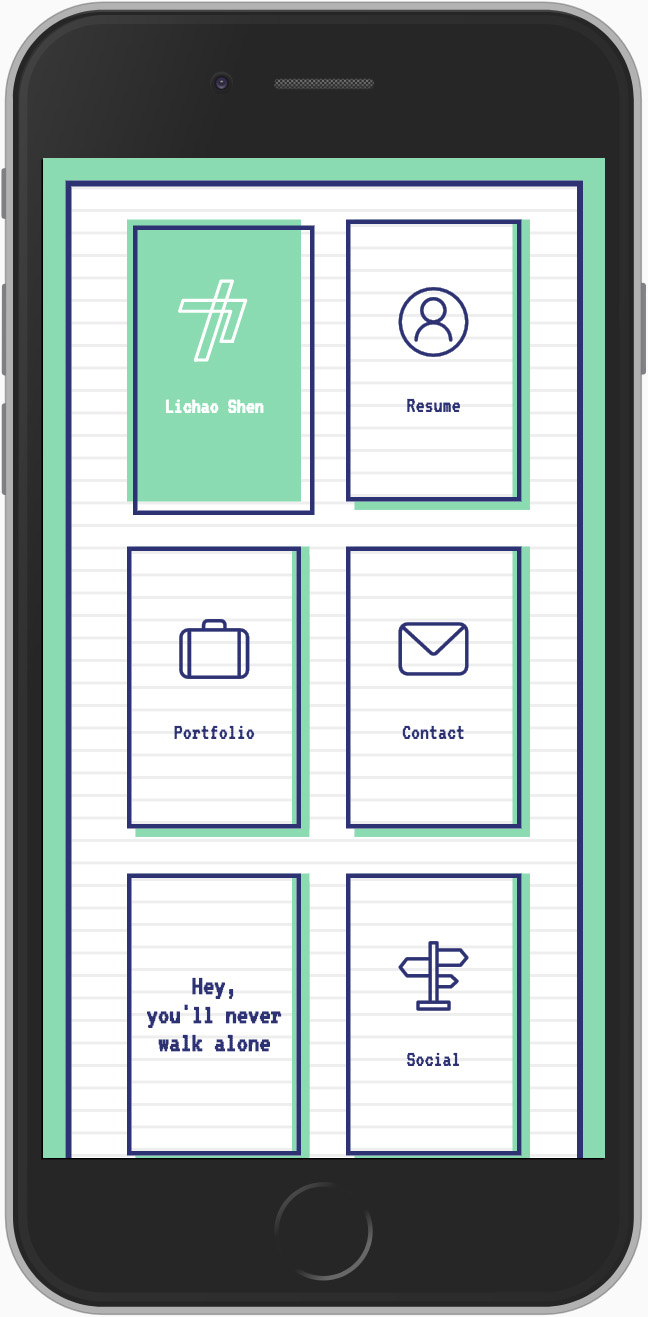

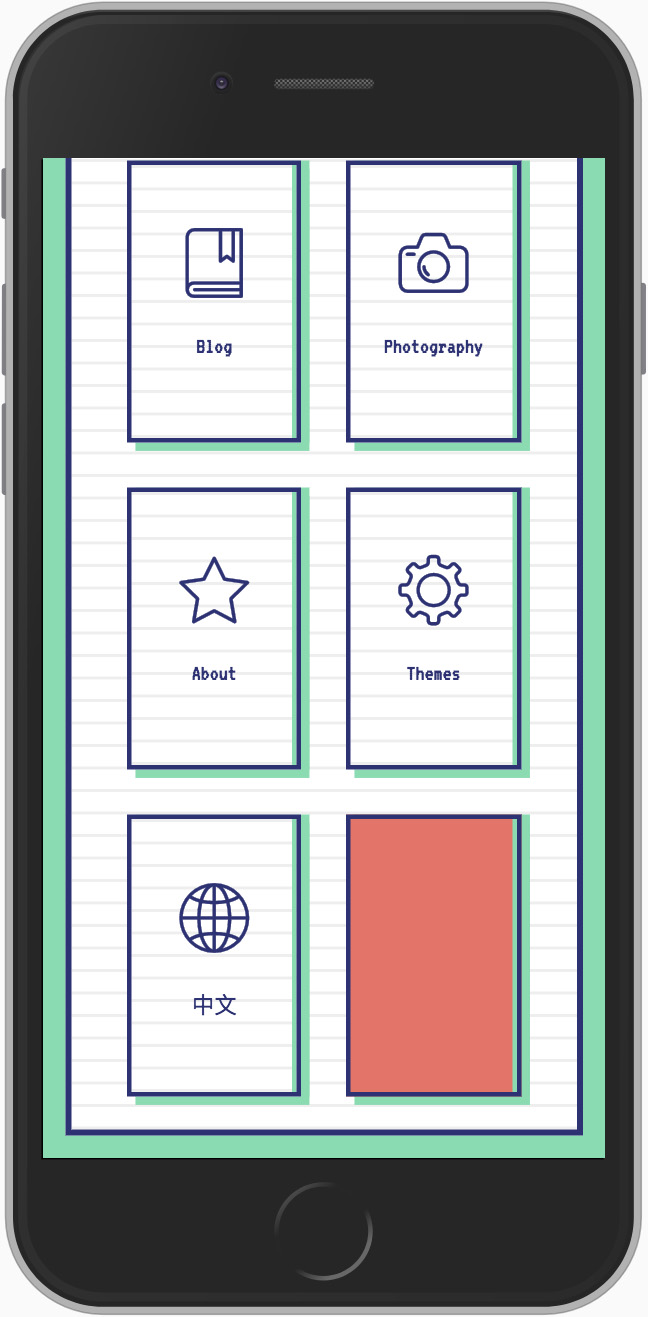

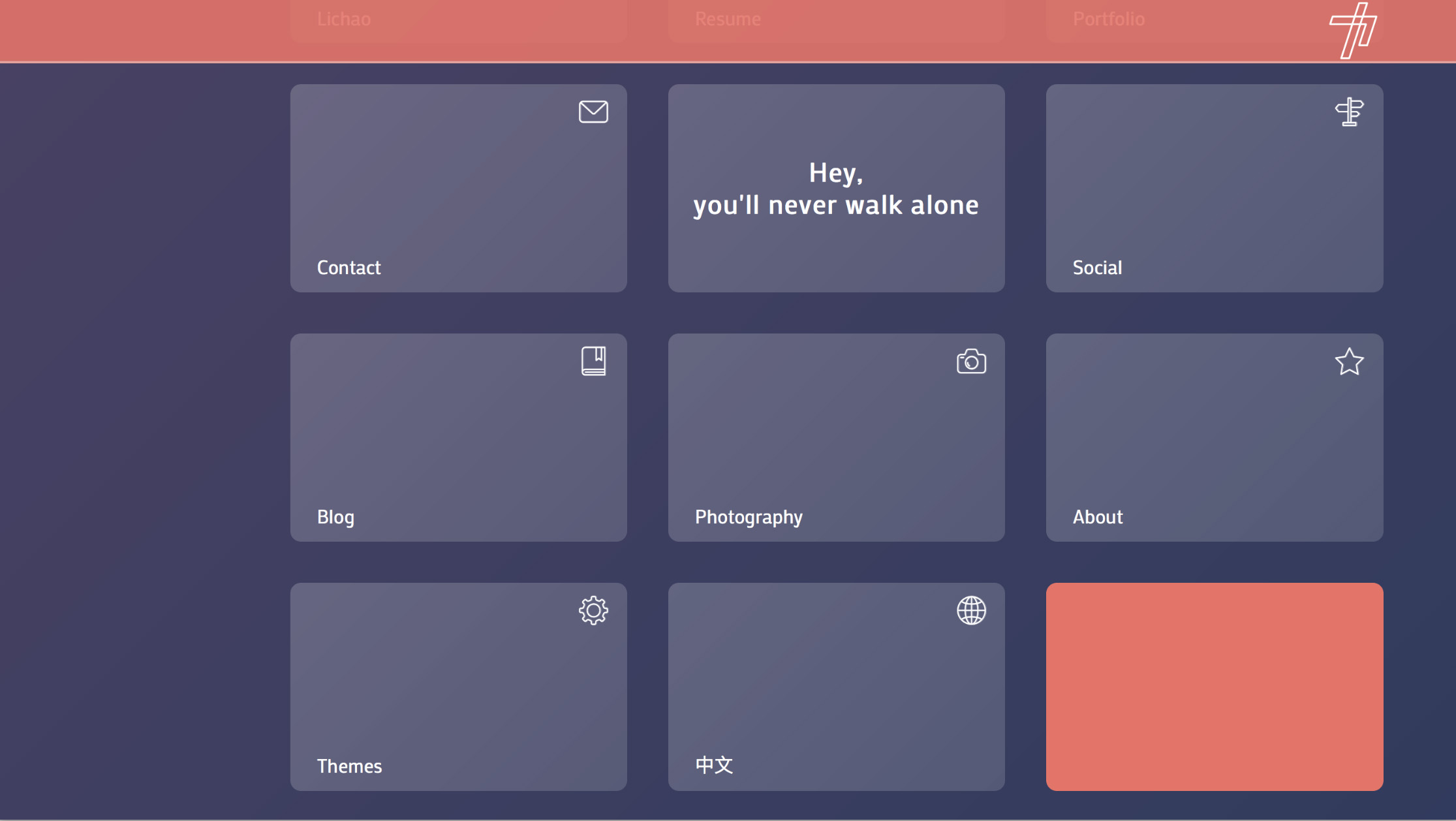

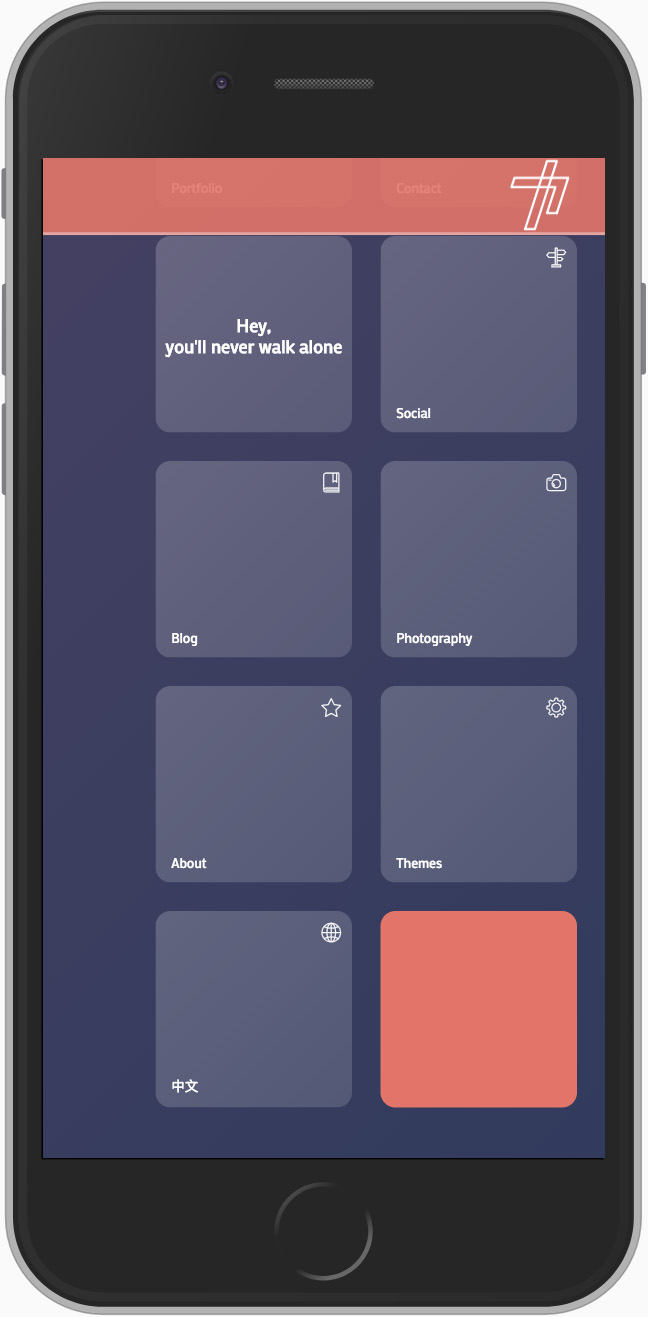

Dododo is a to-do list app with additional calendar and instant-messaging features. A newly designed visual identification system helps users judge the urgency and importance of each task more easily and then decide what to do. Its built-in instant messaging and schedule sync also reduce the effort of topic-related communication and feedback.

- Led a group to finish the project.

- Proposed the concept and designed the interaction structure and user interface.
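The description does not say how Dododo encodes urgency and importance. One familiar scheme is the urgent/important (Eisenhower) matrix, sketched below purely as an assumption; the quadrant names, the hypothetical color codes standing in for the visual identification system, and the sort order are illustrative only.

```python
from enum import Enum


class Quadrant(Enum):
    """Urgent/important quadrants (Eisenhower matrix), used here as an assumed scheme."""
    DO_NOW = "urgent and important"
    SCHEDULE = "important, not urgent"
    DELEGATE = "urgent, not important"
    DROP = "neither urgent nor important"


# Hypothetical color codes standing in for a visual identification system.
COLORS = {
    Quadrant.DO_NOW: "red",
    Quadrant.SCHEDULE: "orange",
    Quadrant.DELEGATE: "blue",
    Quadrant.DROP: "gray",
}

# Display order: most pressing quadrant first.
ORDER = [Quadrant.DO_NOW, Quadrant.SCHEDULE, Quadrant.DELEGATE, Quadrant.DROP]


def classify(urgent: bool, important: bool) -> Quadrant:
    """Place a task in one of the four quadrants."""
    if urgent and important:
        return Quadrant.DO_NOW
    if important:
        return Quadrant.SCHEDULE
    if urgent:
        return Quadrant.DELEGATE
    return Quadrant.DROP


def sort_tasks(tasks: list[tuple[str, bool, bool]]) -> list[tuple[str, bool, bool]]:
    """Sort (name, urgent, important) tuples so the most pressing tasks come first."""
    return sorted(tasks, key=lambda t: ORDER.index(classify(t[1], t[2])))


if __name__ == "__main__":
    tasks = [("tidy desk", False, False), ("submit report", True, True), ("plan trip", False, True)]
    for name, urgent, important in sort_tasks(tasks):
        q = classify(urgent, important)
        print(f"{name}: {q.value} ({COLORS[q]})")
```

Reducing each task to two yes/no judgments keeps the choice quick, and mapping each quadrant to one color lets a list be scanned at a glance.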

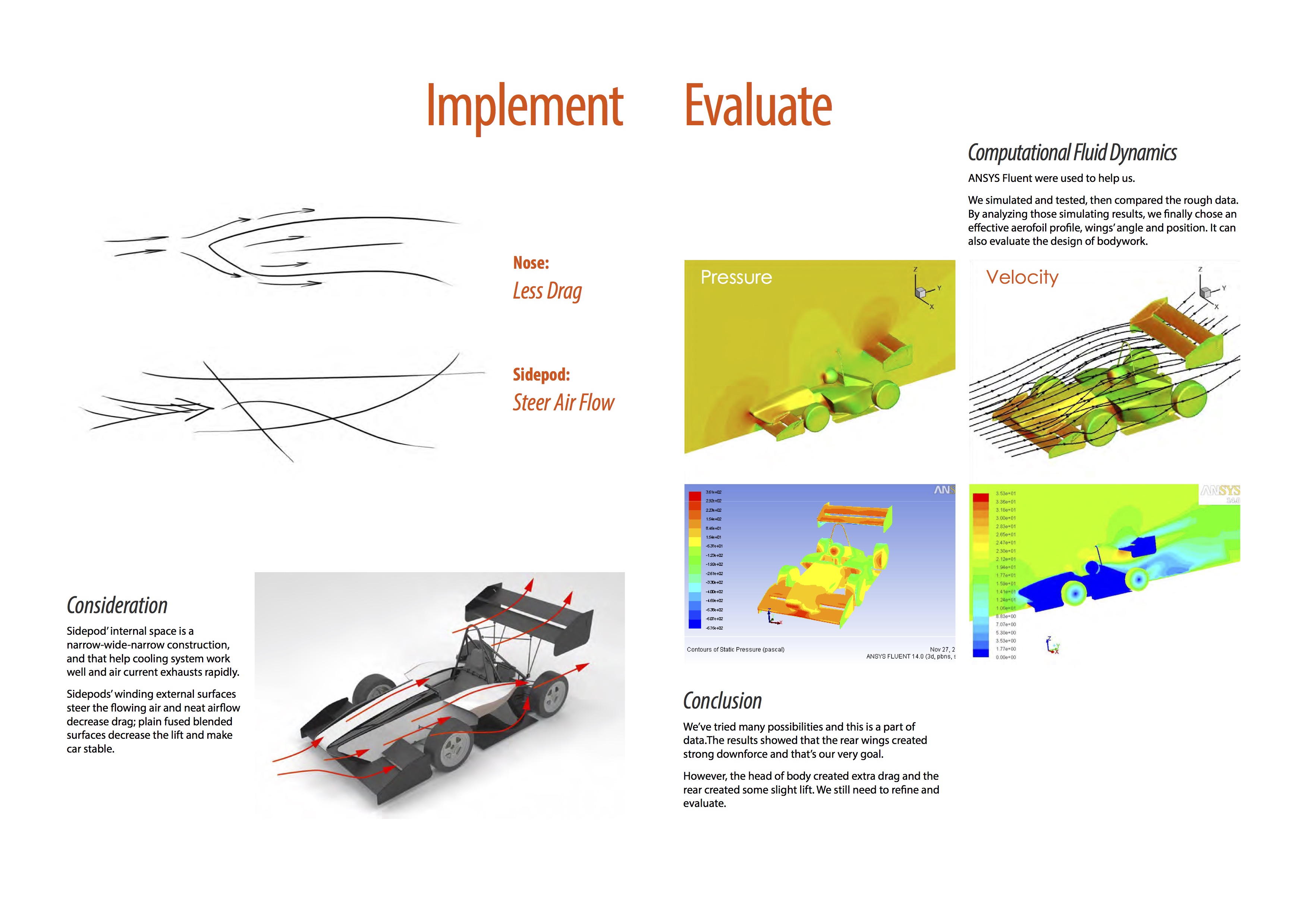

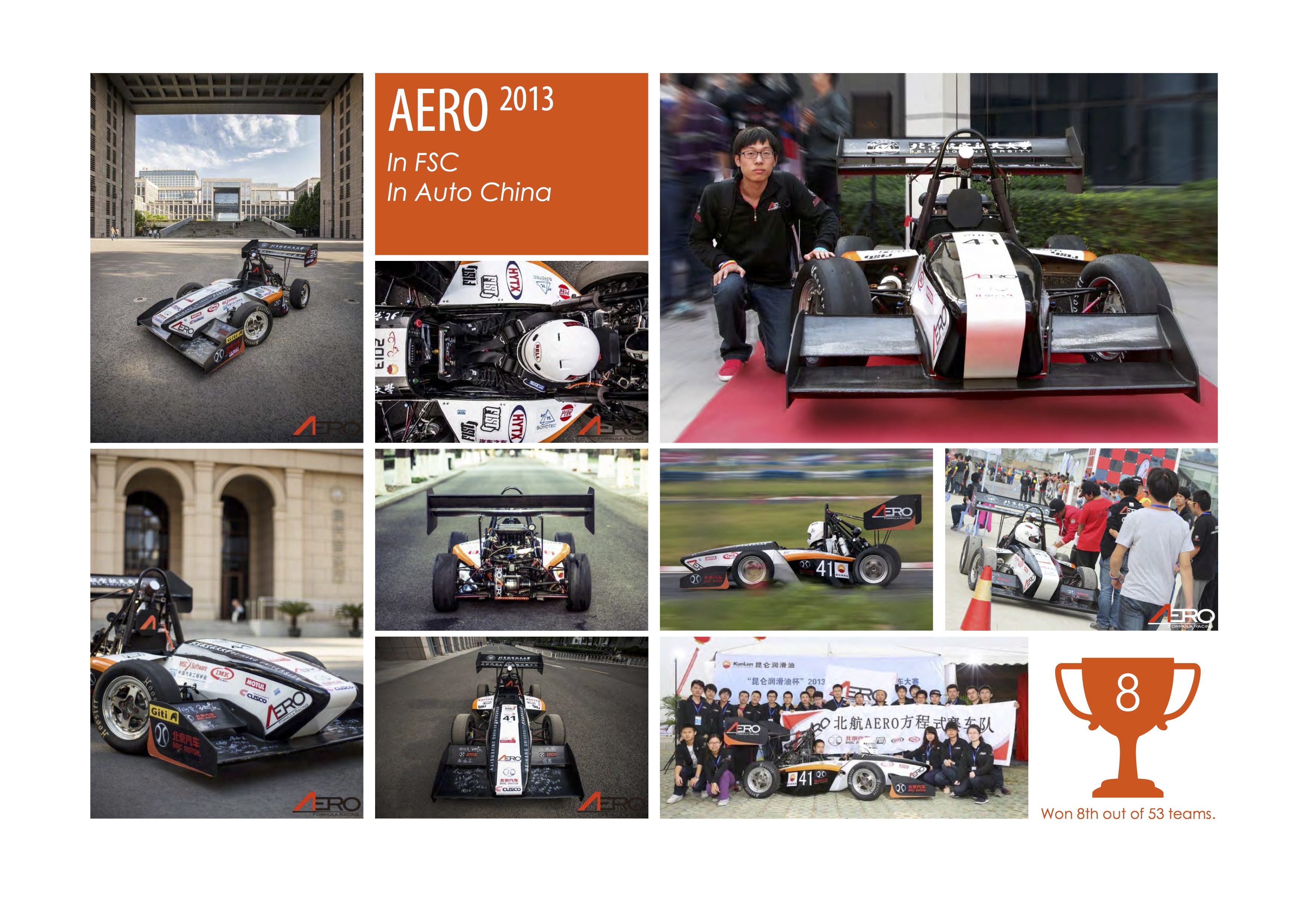

AERO-2013 Racing Car

AERO-2013 is the 2013 model of AERO, a student racing team. It is a race car built for the Formula SAE series of competitions, and the design was developed collaboratively according to the Formula SAE rules. Aerodynamic effects were analyzed using computational fluid dynamics (CFD). The car placed 8th out of 53 teams at Formula Student China 2013 and was then selected by the sponsor for exhibition at Auto China 2014.

- Designed the bodywork and aerodynamic kit.

- Participated in building the vehicle.